Preface

I started with two vague assumptions driving my Ph.D. First, linked open data can serve as a better infrastructure for online markets. Second, matchmakers operating in this infrastructure can remove some friction from conducting business transactions in the markets, thereby making the market allocation more efficient. What I needed to play with these ideas was a market in which data on both supply and demand is available. The public procurement market offers a feature few other markets have: demands are explicitly represented as data in the form of public procurement notices, thanks to their proactive disclosure as open data mandated by law. Moreover, as a domain fraught with numerous data quality issues, public procurement presents a great opportunity to remedy the issues with the technologies of linked data, also known as the semantic web stack. My work began.

I have worked with linked open data on and off since 2009. The topic I chose for my Ph.D. thus constituted a natural continuation of my prior efforts. Late in 2010 my colleagues and I started to discuss joining the LOD2 project,1 a 7th Framework Programme EU research project on linked open data, which turned out to be instrumental for my Ph.D. The project set out to deliver a software stack for working with linked open data. We proposed to extend and deploy the software for a distributed marketplace of public sector contracts published as linked open data. Matchmaking was conceived as a key functionality operating in this marketplace to yield an economic impact of open data. The LOD2 project funded my work from 2011 to 2014. In 2012, in order to be able to work on the project, I enrolled in the University of Economics, Prague (UEP) as a Ph.D. student and set out to pursue the goals of this dissertation. The jigsaw was falling into place.

UEP constituted an appropriate environment to carry out my Ph.D. The chosen dissertation topic was firmly grounded in the research direction of the Department of Information and Knowledge Engineering (DIKE) at UEP where my work was done. My research built on both linked data and data mining, uniting the two principal areas researched at DIKE. Linked data is a pervasive part of my work, manifest in the data preparation as well as in the SPARQL-based matchmakers. Data mining surfaces in the application of tensor factorization via RESCAL for matchmaking. Moreover, the overarching economic objectives motivating my Ph.D. research fit the domain targeted by UEP.

An online version of the dissertation is available at http://mynarz.net/dissertation.

Acknowledgements

I would like to thank my supervisor doc. Ing. Vojtěch Svátek, Dr. for creating an environment in which this research could be done and for helping me navigate the mazes of academia. Completing this dissertation would also not have been feasible without the developers of open source software, whom I want to thank for sharing their work. I am grateful to dr. Tommaso di Noia, who allowed me to visit his group at the Polytechnic University of Bari, Italy. During this internship, Tommaso helped me to clarify the relation of my topic to recommender systems. Vito Claudio Ostuni, then one of Tommaso’s Ph.D. students, suggested looking into case-based reasoning, which provided my work with a useful conceptual framework. My thanks also go to RNDr. Jakub Klímek, Ph.D. from the Czech Technical University for his help with data preparation, and to PhDr. Ing. Jiří Skuhrovec, Ph.D. from Datlab s.r.o. for supplying me with the zIndex data as well as insights into the public procurement domain. I appreciate Michal Hoftich’s assistance with typesetting in LaTeX and Sarven Capadisli’s help with publishing the dissertation on the Web. Special thanks belong to my friends and family who helped me stay sane during the long years of my Ph.D. endeavour.

The research presented in this dissertation was partially supported by the EU ICT FP7 project no. 257943 (LOD2 project) and by the H2020 project no. 645833 (OpenBudgets.eu).

1 Introduction

In order for demand and supply to meet, they must learn about each other. Data on demands and supplies thus needs to be accessible, discoverable, and usable. As data grows to larger volumes, its machine-readability becomes paramount, so that machines can make it usable for people, for whom dealing with large data is impractical. Moreover, relevant data may be fragmented across diverse data sources, which need to be integrated to enable their effective use. However, collecting and integrating data manually takes a lot of effort.

Some of the manual effort involved in gathering and evaluating data about demands and supplies can be automated by matchmaking, as explained further in Section 1.6. Simply put, matchmakers are tools that retrieve data matching a query. In the matchmaking setting, either demands or supplies are cast as queries while the other side is treated as the queried data. The queries produce matches from the queried data, ranked by the degree to which they satisfy the given query.
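The query-and-rank view of matchmaking can be illustrated with a minimal Python sketch. It is not one of the matchmakers developed in this work: the Jaccard overlap of feature sets is a stand-in for the degree of satisfaction, and the contract, the bidders, and their feature identifiers are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of features common to both descriptions (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match(query: Set[str], candidates: Dict[str, Set[str]], k: int = 3) -> List[Tuple[str, float]]:
    """Rank candidates by how well they satisfy the query and keep the top k."""
    scored = [(name, jaccard(query, features)) for name, features in candidates.items()]
    # Drop candidates that share nothing with the query and put the best matches
    # first; ties are broken by name to keep the output deterministic.
    ranked = sorted((s for s in scored if s[1] > 0), key=lambda s: (-s[1], s[0]))
    return ranked[:k]


# A hypothetical demand (a public contract) cast as the query ...
contract = {"cpv:financial-services", "region:CZ010"}
# ... and hypothetical supplies (bidders) treated as the queried data.
bidders = {
    "bidder-a": {"cpv:financial-services", "region:CZ010"},
    "bidder-b": {"cpv:financial-services", "region:CZ020"},
    "bidder-c": {"cpv:road-repairs"},
}

matches = match(contract, bidders)
```

Swapping the roles, so that a bidder's description becomes the query and contracts become the queried data, requires no change to the functions.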

Our work concerns matchmaking of bidders and public contracts. The primary motivation for our research is to improve the efficiency of resource allocation in public procurement by providing better information to the participants of the public procurement market. We employ matchmaking as a way to find information that is useful for the market participants. In the context of public procurement, matchmaking can suggest relevant business opportunities to bidders or recommend to contracting authorities which bidders are suitable to be approached for a given public contract.

1.1 Research topic

Our approach to matchmaking is based on two components: good data and good technologies. We employ linked open data as a method to defragment and integrate public procurement data and to enable combining it with other data. A key challenge in using linked open data is to reuse or develop appropriate techniques for data preparation.

We demonstrate how two generic approaches can be applied to the matchmaking task, namely case-based reasoning and statistical relational learning. In the context of case-based reasoning, we treated matchmaking as top-\(k\) recommendation. We used the SPARQL (Harris and Seaborne 2013) query language to implement this task. In the case of statistical relational learning, we approached matchmaking as link prediction. We used tensor factorization with RESCAL (Nickel et al. 2011) for this task. The key challenges of matchmaking by these technologies involve feature selection or feature construction, ranking by feature weights, and combination functions for aggregating similarity scores of matches. Our work discusses these challenges and proposes novel ways of addressing them.
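For orientation, the link prediction view can be stated compactly. The following is a sketch of the RESCAL model in the form commonly given following Nickel et al. (2011); the symbols \(X_k\), \(A\), \(R_k\), \(n\), \(m\), \(r\), and \(\lambda\) are introduced here for illustration only, and constant factors are omitted. Relational data with \(n\) entities and \(m\) relations is represented as binary \(n \times n\) adjacency matrices \(X_k\), one per relation, each of which is approximated by a product of a shared \(n \times r\) entity matrix \(A\) and a relation-specific \(r \times r\) matrix \(R_k\):

\[ X_k \approx A R_k A^{\top}, \qquad k = 1, \dots, m \]

The factors are typically found by minimizing a regularized squared error:

\[ \min_{A, R_1, \dots, R_m} \sum_{k=1}^{m} \lVert X_k - A R_k A^{\top} \rVert_F^2 + \lambda \Bigl( \lVert A \rVert_F^2 + \sum_{k=1}^{m} \lVert R_k \rVert_F^2 \Bigr) \]

A candidate link between entities \(i\) and \(j\) in relation \(k\) is then scored as \(a_i^{\top} R_k a_j\), where \(a_i\) is the \(i\)-th row of \(A\). Ranking the entities \(j\) by this score yields the predicted links.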

In order to explore the outlined approaches we prepared a Czech public procurement dataset that links several related open government data sources together, such as the Czech business register or the postal addresses from the Czech Republic. Our work can therefore be considered a concrete use case in Czech public procurement. Viewed as a use case, our task is to select, combine, and apply state-of-the-art techniques to a real-world scenario. Our key stakeholders in this use case are the participants in the public procurement market: contracting authorities, who represent the side of demand, and bidders, who represent the side of supply. The stakeholder groups are driven by different interests; contracting authorities represent the public sector while bidders represent the private sector, which gives rise to a sophisticated interplay of the legal framework of public procurement and the commercial interests.

1.2 Goals and methods

Our research goal is to explore matchmaking of public contracts to bidders operating on linked open data. In particular, we want to explore what methods can be adopted for this task and discover the most salient factors influencing the quality of matchmaking, with a specific focus on what linked open data enables. In order to pursue this goal we prepare public procurement linked open data and develop software for matchmaking. Our secondary goal, implied by our research direction, is to test the available implementations of the semantic web technologies for handling linked open data and, if these tools are found lacking, to develop auxiliary tools to support data preparation and matchmaking. This secondary goal was not formulated upfront; we only specified it explicitly as we progressed in the pursuit of our primary goal.

In order to be able to deliver on the stated goals, their prerequisites must be fulfilled. Applied research depends on the availability of its building blocks. Our research is built on open data and open-source software. We need public procurement data to be available as open data, as described in Section 1.4.1. The data must be structured in a way from which a semantic description of the data can be created, implying that the data is machine-readable and consistent. Consistency of the data arises from standardization, including the adherence to fixed schemas and code lists. We conceive matchmaking as a high-level task based on many layers of technology. Both our data preparation tools and matchmakers build on open-source components. In the pursuit of our goals we reused and orchestrated a large set of existing open-source software.

We adopt the methods of design science (Hevner et al. 2004) in our research. We design artefacts, including the Czech public procurement dataset and the matchmakers, and experiment with them to tell which of their variants perform better. Viewed this way, our task is to explore what kinds of artefacts for matchmaking of public contracts to bidders are made feasible by linked open data.

The key question to evaluate is whether we can develop a matchmaker that can produce results deemed useful by domain experts representing the stakeholders. We evaluate the developed matchmakers via offline experiments on retrospective data. In terms of our target metrics, we aim to recommend matches exhibiting both high accuracy and diversity. In order to discover the key factors that improve matchmaking we compare the evaluation results produced by the developed matchmakers in their different configurations.

The principal contributions of our work are the implemented matchmaking methods, the reusable datasets for testing these methods, and generic software for processing linked open data. By using experimental evaluation of these methods we derive general findings about the factors that have the largest impact on the quality of matchmaking of bidders to public contracts.

We need to acknowledge the limitations of our contributions. Our work covers only a narrow fraction of matchmaking that is feasible. The two methods we applied to matchmaking are evaluated on a single dataset. Narrowing down the data we experimented with to one dataset implies a limited generalization ability of our findings. Consequently, we cannot guarantee that the findings would translate to other public procurement datasets. We used only quantitative evaluation with retrospective data, which gives us a limited insight into the evaluated matchmaking methods. A richer understanding of the methods could have been obtained via qualitative evaluation or online evaluation involving users of the matchmakers.

1.3 Outline

We follow a simple structure in this dissertation. This chapter introduces our research, explains the preliminaries and the context on which our work builds, and surveys the related research (1.7) to position our contributions. The dissertation continues with a substantial chapter on data preparation (2) that describes the extensive effort we invested in pre-processing data for the purposes of matchmaking. In line with the characteristics of linked open data, the key parts of this chapter deal with linking (2.4) and data fusion (2.5). We follow up with a principal chapter that describes the matchmaking methods we designed and implemented (3), which includes matchmaking based on SPARQL (3.2) and on tensor factorization by RESCAL (3.3). The subsequent chapter discusses the evaluation (4) of the devised matchmaking methods using the datasets we prepared. We experimented with many configurations of the matchmaking methods in the evaluation. In that chapter, we present the results of selected quantitative evaluation metrics and provide interpretations of the obtained results. Finally, the concluding chapter (5) closes the dissertation, summarizing its principal contributions and remarking on its limitations that may be addressed in future research.

The contributions presented in this dissertation, including the methods and software, were authored or co-authored by the dissertation’s author, unless stated otherwise. Both the reused and the developed software are listed in Appendix 6. The abbreviations used throughout the text are collected at the end of the dissertation. All vocabulary prefixes used in the text can be resolved to their corresponding namespace IRIs via http://prefix.cc.

1.4 Linked open data

Linked open data (LOD) is the intersection of open and linked data. It combines proactive disclosure of open data, which is unencumbered by restrictions to access and use, with linked data, which provides a model for publishing semantic structured data on the Web. LOD serves as a fundamental component of our work that enables matchmaking to be executed.

1.4.1 Open data

Open data is data that can be accessed equally by people and machines. Its definition is grounded in principles that assert what conditions data must meet to achieve legal and technical openness. Principles of open data are perhaps best embodied in the Open Definition (Open Knowledge 2015) and the Eight principles of open government data (2007). According to the Open Definition’s summary, “open data and content can be freely used, modified, and shared by anyone for any purpose.”2 The Eight principles of open government data draw similar requirements as the Open Definition and add demands for completeness, primacy, and timeliness.

Open data is particularly prominent in the public sector, since public sector data is subject to disclosure mandated by law. Open data can be a result of either reactive disclosure, such as upon Freedom of Information requests, or proactive disclosure, such as by publishing open data. In the case of the EU, disclosure of public sector data is regulated by the directive 2013/37/EU on the re-use of public sector information (EU 2013).

While releasing open data is frequently framed as a means to improve transparency of the public sector, it can also have a positive effect on its efficiency (Access Info Europe and Open Knowledge Foundation 2011, p. 69), since the public sector itself is often the primary user of open data. Using open data can help streamline public sector processes (Parycek et al. 2014, p. 90) and curb unnecessary expenditures (Prešern and Žejn 2014, p. 4). The publication of public procurement data is claimed to improve “the quality of government investment decision-making” (Kenny and Karver 2012, p. 2), as supervision enabled by access to data puts pressure on contracting authorities to follow fair and budget-wise contracting procedures. Matchmaking public contracts to relevant suppliers can be considered an application of open data that can contribute to better-informed decisions leading to more economically advantageous contracts.

Open data can help balance information asymmetries between participants of public procurement markets. The asymmetries may be caused by clientelism, siloing data in applications with restricted access, or fragmentation of data across multiple sources. Open access to public procurement data can increase the number of participants in procurement, since more bidders can learn about relevant opportunities if they are advertised openly. Even distribution of open data may eventually lead to better decisions of the market participants, thereby increasing the efficiency of resource allocation in public procurement.

Open data addresses two fundamental problems of recommender systems, which apply to matchmaking as well. These problems comprise the cold start problem and data sparseness, which can be jointly described as the data acquisition problem (Heitmann and Hayes 2010). The cold start problem concerns the lack of data needed to make recommendations. It appears in new recommender systems that have yet to acquire users to amass enough data to make accurate recommendations. Open data ameliorates this problem by allowing a system to be bootstrapped from openly available datasets. In our case, we use open data from business registers to obtain descriptions of business entities that have not been awarded a contract yet, in order to make them discoverable for matchmaking. Data sparseness refers to the share of missing values in a dataset. If a large share of the matched entities is lacking values of the key properties leveraged by matchmaking, the quality of matchmaking results deteriorates. Complementary open datasets can help fill in the blank values or add extra features (Di Noia and Ostuni 2015, p. 102) that improve the quality of matchmaking.
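As a small illustration of data sparseness and of filling blanks from a complementary dataset, consider the following Python sketch. The bidder records, the register, and the property names are hypothetical and are not drawn from the datasets prepared in this work.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]


def sparseness(entities: List[Record], properties: List[str]) -> float:
    """Share of (entity, property) cells that have no value."""
    total = len(entities) * len(properties)
    if total == 0:
        return 0.0
    missing = sum(1 for e in entities for p in properties if e.get(p) is None)
    return missing / total


def fill_blanks(entities: List[Record], complement: Dict[str, Record], key: str) -> List[Record]:
    """Return copies of the entities with blank values filled from a complementary dataset."""
    filled = []
    for entity in entities:
        merged = dict(entity)
        for prop, value in complement.get(entity[key], {}).items():
            # Existing values take precedence; only blanks are filled.
            if merged.get(prop) is None:
                merged[prop] = value
        filled.append(merged)
    return filled


# Hypothetical bidders known from procurement data, with missing values ...
bidders = [
    {"id": "CZ001", "activity": "nace:64", "region": None},
    {"id": "CZ002", "activity": None, "region": None},
]
# ... and a hypothetical business register that complements them.
register = {
    "CZ001": {"region": "CZ010"},
    "CZ002": {"activity": "nace:42", "region": "CZ020"},
}

properties = ["activity", "region"]
before = sparseness(bidders, properties)
after = sparseness(fill_blanks(bidders, register, "id"), properties)
```

Here three of the four cells are blank before the register is merged in and none afterwards.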

The work presented here was done within the broader context of the OpenData.cz3 initiative. OpenData.cz is an initiative for a transparent data infrastructure of the Czech public sector. It advocates adopting open data and linked data principles for publishing data on the Web. Our contributions described in Section 2 enhance this infrastructure by supplying it with more open datasets and improving the existing ones.

1.4.2 Linked data

Linked data is a set of practices for publishing structured data on the Web. It is a way of structuring data that identifies entities with Internationalized Resource Identifiers (IRIs) and materializes their relationships as a network of machine-processable data (Ayers 2007, p. 94). IRIs are universal, so that any entity can be identified with an IRI, and have global scope, so that an IRI can only identify one entity (Berners-Lee 1996). A major manifestation of linked data is the Linking Open Data Cloud (Abele et al. 2017), which maps the web of semantically structured data that spans hundreds of datasets from diverse domains, such as health care or linguistics. In this section we provide a basic introduction to the key aspects of linked data that we built on in this dissertation. A more detailed introduction to linked data is available in Heath and Bizer (2011).

Linked data may be seen as a pragmatic implementation of the so-called semantic web vision. It is based on semantic web technologies. This technology stack is largely built upon W3C standards.4 The fundamental standards of the semantic web technology stack, which are used throughout our work, are the Resource Description Framework (RDF), RDF Schema (RDFS), and SPARQL.

1.4.2.1 RDF

RDF (Cyganiak et al. 2014) is a graph data format for exchanging data on the Web. The formal data model of RDF is a directed labelled multi-graph. Nodes and edges in RDF graphs are called resources. Resources can be either IRIs, blank nodes, or literals. IRIs from the set \(I\) refer to resources, blank nodes from the set \(B\) reference resources without intrinsic names, and literals from the set \(L\) represent textual values. \(I\), \(B\), and \(L\) are pairwise disjoint sets. An RDF graph can be decomposed into a set of statements called RDF triples. An RDF triple can be defined as \((s, p, o) \in (I \cup B) \times I \times (I \cup B \cup L)\). In such a triple, \(s\) is called the subject, \(p\) the predicate, and \(o\) the object. As the definition indicates, subjects can be either IRIs or blank nodes, predicates can be only IRIs, and objects can be IRIs, blank nodes, or literals. Predicates are also often referred to as properties. RDF graphs can be grouped into RDF datasets. Each graph in an RDF dataset can be associated with a name \(g \in (I \cup B)\). RDF datasets can thus be decomposed into quadruples \((s, p, o, g)\), where \(g\) names the graph the triple belongs to; a graph with a name is called a named graph.
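The definition of triples and quadruples can be mirrored directly in code. The following Python sketch models the three disjoint sets of terms as separate classes and checks the positional constraints; the class names and the example IRIs are chosen for illustration and do not come from any RDF library.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class IRI:
    value: str


@dataclass(frozen=True)
class BlankNode:
    label: str


@dataclass(frozen=True)
class Literal:
    lexical: str
    language: Optional[str] = None


# Separate classes keep the sets I, B, and L pairwise disjoint.
Term = Union[IRI, BlankNode, Literal]


def is_triple(s: Term, p: Term, o: Term) -> bool:
    """Check (s, p, o) against (I ∪ B) × I × (I ∪ B ∪ L)."""
    return (
        isinstance(s, (IRI, BlankNode))
        and isinstance(p, IRI)
        and isinstance(o, (IRI, BlankNode, Literal))
    )


def is_quad(s: Term, p: Term, o: Term, g: Term) -> bool:
    """A quadruple adds a graph name g ∈ (I ∪ B) to a well-formed triple."""
    return is_triple(s, p, o) and isinstance(g, (IRI, BlankNode))


# Hypothetical terms used only to exercise the checks.
contract = IRI("http://example.com/contract/1")
title = IRI("http://purl.org/dc/terms/title")
label = Literal("Financial loan provision", "en")
graph = IRI("http://example.com/graph/contracts")

valid = is_triple(contract, title, label)
literal_subject = is_triple(label, title, contract)
blank_predicate = is_triple(contract, BlankNode("b0"), contract)
valid_quad = is_quad(contract, title, label, graph)
```

Only the first and the last check succeed: a literal cannot be a subject and a blank node cannot be a predicate.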

What we described above is the abstract syntax of RDF. In order to be able to exchange RDF graphs and datasets, a serialization is needed. RDF can be serialized into several concrete syntaxes, including Turtle (Beckett et al. 2014), JSON-LD (Sporny et al. 2014), or N-Quads (Carothers 2014). An example of data describing a public contract serialized in the Turtle syntax is shown in Listing 1.

Listing 1: Example data in Turtle

@prefix contract: <http://linked.opendata.cz/resource/isvz.cz/contract> .
@prefix dcterms:  <http://purl.org/dc/terms/> .
@prefix pc:       <http://purl.org/procurement/public-contracts#> .

contract:60019151 a pc:Contract ;
  dcterms:title "Poskytnutí finančního úvěru"@cs,
                "Financial loan provision"@en ;
  pc:contractingAuthority business-entity:CZ00275492 .

1.4.2.2 RDF Schema

RDFS (Brickley and Guha 2014) is an ontological language for describing semantics of data. It provides a way to group resources as instances of classes and describe relationships among the resources. RDFS terms are endowed with inference rules that can be used to materialize data implied by the rules. Relationships between RDF resources are represented as properties. Properties are defined in RDFS in terms of their domain and range. For each RDF triple with a given property, its subject may be inferred to be an instance of the property’s domain, while its object is treated as an instance of the property’s range. Moreover, RDFS can express subsumption hierarchies between classes or properties. If more sophisticated ontological constraints are required, they can be defined by the Web Ontology Language (OWL) (W3C OWL Working Group 2012). RDFS and OWL can be used in tandem to create vocabularies that provide classes and properties to describe data. Vocabularies enable tools to operate on datasets sharing the same vocabulary without dataset-specific adaptations. The explicit semantics provided by RDF vocabularies makes datasets described by such vocabularies machine-understandable to a limited extent. For example, we use the Public Contracts Ontology, described in Section 2.1.1, for this purpose in our work.
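The way RDFS inference rules materialize implied data can be sketched in a few lines of Python. The sketch covers only three rules (domain, range, and subclass propagation of types) and ignores literals; the `ex:` schema statements are made up for illustration and are not taken from the Public Contracts Ontology.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

RDF_TYPE = "rdf:type"
RDFS_DOMAIN = "rdfs:domain"
RDFS_RANGE = "rdfs:range"
RDFS_SUBCLASS = "rdfs:subClassOf"


def materialize(triples: Set[Triple]) -> Set[Triple]:
    """Apply the domain, range, and subclass rules until nothing new is inferred."""
    inferred = set(triples)
    while True:
        new: Set[Triple] = set()
        for s, p, o in inferred:
            for prop, rel, cls in inferred:
                if prop == p and rel == RDFS_DOMAIN:
                    new.add((s, RDF_TYPE, cls))  # subject is an instance of the domain
                elif prop == p and rel == RDFS_RANGE:
                    new.add((o, RDF_TYPE, cls))  # object is an instance of the range
            if p == RDF_TYPE:
                for sub, rel, sup in inferred:
                    if rel == RDFS_SUBCLASS and sub == o:
                        new.add((s, RDF_TYPE, sup))  # instances belong to superclasses
        if new <= inferred:
            return inferred
        inferred |= new


# Hypothetical schema and a single data triple.
data = {
    ("ex:contractingAuthority", RDFS_DOMAIN, "ex:Contract"),
    ("ex:contractingAuthority", RDFS_RANGE, "ex:ContractingAuthority"),
    ("ex:ContractingAuthority", RDFS_SUBCLASS, "ex:Organization"),
    ("ex:contract1", "ex:contractingAuthority", "ex:city1"),
}

closure = materialize(data)
```

From the single data triple the rules derive that `ex:contract1` is an `ex:Contract` and that `ex:city1` is both an `ex:ContractingAuthority` and an `ex:Organization`.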

1.4.2.3 SPARQL

SPARQL (Harris and Seaborne 2013) is a query language for RDF data. The syntax of SPARQL was inspired by SQL. The WHERE clauses in SPARQL specify graph patterns to match in the queried data. The syntax of graph patterns extends the Turtle RDF serialization with variables, which are names prefixed either by ? or $. Matches of graph patterns can be further restricted by FILTER constraints that evaluate boolean expressions on RDF terms, such as by testing ranges of numeric literals or asserting required language tags of string literals. Solutions to SPARQL queries are subgraphs that match the specified graph patterns. The solutions are subsequently processed by modifiers, such as by deduplication or ordering. Solutions are output based on the query type. ASK queries output boolean values, SELECT queries output tabular data, and CONSTRUCT or DESCRIBE queries output RDF graphs. An example SPARQL query that retrieves all classes instantiated in a dataset and orders them alphabetically is shown in Listing 2.
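To make the evaluation steps concrete, the following Python sketch mimics how triple patterns with variables are matched against data and how the DISTINCT and ORDER BY modifiers post-process the solutions. It is a toy evaluator written for illustration, not how a SPARQL engine is implemented; the data is hypothetical, and the variable `?s` plays the role that the blank node `[]` plays in Listing 2.

```python
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]


def is_var(term: str) -> bool:
    """Variables are names prefixed by ? or $."""
    return term.startswith("?") or term.startswith("$")


def match_pattern(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend a binding so that the pattern equals the triple, or return None."""
    result = dict(binding)
    for p_term, t_term in zip(pattern, triple):
        if is_var(p_term):
            name = p_term[1:]  # ?x and $x name the same variable
            if result.setdefault(name, t_term) != t_term:
                return None  # the variable is already bound to another term
        elif p_term != t_term:
            return None
    return result


def solve(patterns: List[Triple], data: Set[Triple]) -> List[Binding]:
    """Join the triple patterns of a graph pattern from left to right."""
    solutions: List[Binding] = [{}]
    for pattern in patterns:
        solutions = [
            extended
            for binding in solutions
            for triple in data
            for extended in [match_pattern(pattern, triple, binding)]
            if extended is not None
        ]
    return solutions


# Hypothetical data with two instantiated classes.
data = {
    ("ex:c1", "rdf:type", "pc:Contract"),
    ("ex:c2", "rdf:type", "pc:Contract"),
    ("ex:b1", "rdf:type", "gr:BusinessEntity"),
    ("ex:c1", "dcterms:title", "Financial loan provision"),
}

solutions = solve([("?s", "rdf:type", "?class")], data)
# DISTINCT corresponds to the set, ORDER BY to the sort.
classes = sorted({solution["class"] for solution in solutions})
```

A FILTER would correspond to one more pass that keeps only the bindings for which a boolean expression holds.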

Listing 2: Example SPARQL query

SELECT DISTINCT ?class
WHERE {
  [] a ?class .
}
ORDER BY ?class

1.4.2.4 Linked data principles

Use of the above-mentioned semantic web technologies for publishing linked data is guided by four principles (Berners-Lee 2009):

- Use IRIs as names for things.

- Use HTTP IRIs so that people can look up those names.

- When someone looks up an IRI, provide useful information, using the standards (RDF, SPARQL).

- Include links to other IRIs, so that they can discover more things.

Besides prescribing the way to identify resources, the principles describe how to navigate linked data. The principles invoke the mechanism of dereferencing, by which an HTTP request to a resource’s IRI should return the resource’s description in RDF.
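Dereferencing can be sketched with the Python standard library as follows. The sketch only builds the HTTP request and does not send it. It assumes a server that supports content negotiation via the Accept header, which is a common practice rather than something the principles prescribe, and the IRI is hypothetical.

```python
from urllib.request import Request


def dereference_request(iri: str) -> Request:
    """Build (but do not send) an HTTP GET request asking for an RDF description."""
    return Request(
        iri,
        # Prefer Turtle and accept JSON-LD as a fallback.
        headers={"Accept": "text/turtle, application/ld+json;q=0.9"},
        method="GET",
    )


# A hypothetical resource IRI.
request = dereference_request("http://example.com/resource/contract/1")
```

Passing the request to `urllib.request.urlopen` would perform the lookup; parsing the returned RDF would require an RDF library, which is outside the scope of this sketch.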

Linked data invokes several assumptions that have implications for its users. The non-unique name assumption (non-UNA) posits that two names (identifiers) may refer to the same entity unless explicitly stated otherwise. This assumption implies that deduplication may be needed if identifiers are required to be unique. The open world assumption (OWA) supposes that “the truth of a statement is independent of whether it is known. In other words, not knowing whether a statement is explicitly true does not imply that the statement is false” (Hebeler et al. 2009, p. 103). Due to OWA we cannot infer that missing statements are false. However, it allows us to model incomplete data. This is useful in matchmaking, where “the absence of a characteristic in the description of a supply or demand should not be interpreted as a constraint” (Di Noia et al. 2007, p. 279). Nonetheless, OWA poses a potential problem for classification tasks in machine learning, because linked data rarely contains explicit negative examples (Nickel et al. 2012, p. 272). The principle of Anyone can say anything about anything (AAA) means that the open world of linked data provides no guarantees that the assertions published as linked data are consistent or free of contradictions. Given this principle, quality assessment followed by data pre-processing is typically required when using linked data.
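The consequence of the open world assumption for matchmaking can be illustrated by a three-valued lookup in Python. The sketch is a simplification made for illustration: the statements are hypothetical, and explicit negative statements are kept in a separate set, which is not how RDF represents them.

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]


def holds(statement: Triple, known_true: Set[Triple], known_false: Set[Triple]) -> Optional[bool]:
    """Return True or False for explicitly known statements and None for unknown ones."""
    if statement in known_true:
        return True
    if statement in known_false:
        return False
    return None  # open world: the absence of a statement is not its negation


def violates(requirement: Triple, known_true: Set[Triple], known_false: Set[Triple]) -> bool:
    """A requirement is violated only when it is explicitly known to be false."""
    return holds(requirement, known_true, known_false) is False


# Hypothetical knowledge about where three bidders operate.
known_true = {("ex:bidder1", "ex:operatesIn", "ex:Prague")}
known_false = {("ex:bidder2", "ex:operatesIn", "ex:Prague")}

unknown = holds(("ex:bidder3", "ex:operatesIn", "ex:Prague"), known_true, known_false)
excluded = [
    bidder
    for bidder in ("ex:bidder1", "ex:bidder2", "ex:bidder3")
    if violates((bidder, "ex:operatesIn", "ex:Prague"), known_true, known_false)
]
```

Only the bidder with an explicit negative statement is excluded; the bidder about whom nothing is known remains a candidate.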

1.4.2.5 Benefits of linked data for matchmaking

Having considered the characteristics of linked data we may highlight its advantages. Many of these advantages are related to data preparation, which we point out in Section 2. However, linked data can also benefit matchmaking in several ways. This overview draws upon the benefits of linked data for recommender systems identified in related research (Di Noia et al. 2014, 2016), since these benefits apply to matchmaking too.

Unlike textual content, linked data is structured, so there is less need for structuring it via content analysis. RDF gives linked data not only its structure but also the flexibility to model diverse kinds of data. Both content and preferences in recommender systems or matchmaking, such as contract awards in our case, can be expressed in RDF in a uniform way in the same feature space, which simplifies operations on the data. Moreover, the common data model enables combining linked data with external linked datasets that can provide valuable additional features. The mechanism of tagging literal values with language identifiers also makes for an easy representation of multilingual data, such as in the case of cross-country procurement in the EU.

The features in RDF are endowed with semantics originating in RDF vocabularies. The explicit semantics makes the features more telling, as opposed to features produced by shallow content analysis (Jannach et al. 2010, p. 75), such as keywords. While traditional recommender systems are mostly unaware of the semantics of the features they use, linked data features do not have to be treated like black boxes, since their expected interpretations can be looked up in the corresponding RDF vocabularies that define the features.

If the values of features are resources compliant with the linked data principles, their IRIs can be dereferenced to obtain more features from the descriptions of the resources. In this way, linked data allows the retrieval of additional features to be automated. IRIs of linked resources can be automatically crawled to harvest contextual data. Furthermore, crawlers may recursively follow links in the obtained data. The links between datasets can be used to provide cross-domain recommendations. In such a scenario, preferences from one domain can be used to predict preferences in another domain. For example, if in our case we combine data from business and public procurement registers, we may leverage the links between business entities described with concepts from an economic classification to predict their associations to concepts from a procurement classification. If there is no overlap between the resources from the combined datasets, there may be at least an overlap in the RDF vocabularies describing the resources (Heitmann and Hayes 2016), which provide broader conceptual associations.
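The recursive harvesting of features can be sketched as a breadth-first crawl with a depth limit. In the following Python sketch the Web is simulated by an in-memory dictionary so that the example is self-contained; the `dereference` callback, the IRIs, and the `ex:` properties are hypothetical.

```python
from collections import deque
from typing import Callable, Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


def crawl(seed: str, dereference: Callable[[str], List[Triple]], max_depth: int = 2) -> Set[Triple]:
    """Breadth-first harvest of the descriptions reachable from the seed IRI."""
    harvested: Set[Triple] = set()
    visited: Set[str] = {seed}
    queue = deque([(seed, 0)])
    while queue:
        iri, depth = queue.popleft()
        description = dereference(iri)
        harvested.update(description)
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for _, _, obj in description:
            # Follow only objects that look like HTTP IRIs and were not seen yet.
            if obj.startswith("http") and obj not in visited:
                visited.add(obj)
                queue.append((obj, depth + 1))
    return harvested


# A simulated Web: each IRI maps to the description returned on dereferencing.
WEB: Dict[str, List[Triple]] = {
    "http://example.com/contract/1": [
        ("http://example.com/contract/1", "ex:contractingAuthority", "http://example.com/org/1"),
    ],
    "http://example.com/org/1": [
        ("http://example.com/org/1", "ex:activity", "http://example.com/nace/64"),
    ],
    "http://example.com/nace/64": [
        ("http://example.com/nace/64", "ex:label", "Financial services"),
    ],
}


def lookup(iri: str) -> List[Triple]:
    return WEB.get(iri, [])


shallow = crawl("http://example.com/contract/1", lookup, max_depth=1)
deep = crawl("http://example.com/contract/1", lookup, max_depth=2)
```

The depth limit controls how much context is harvested: one hop reaches the contracting authority, while two hops also reach the description of its economic activity.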

1.5 Public procurement domain

Our work targets the domain of public procurement. In particular, we apply the developed matchmaking methods to data describing Czech public procurement. Public procurement is the process by which public bodies purchase products or services from companies. Public bodies make such purchases in the public interest in order to pursue their mission. For example, public procurement can be used to purchase drugs for hospitals, cater for road repairs, or arrange supplies of electricity. Bodies issuing public contracts, such as ministries or municipalities, are referred to as contracting authorities. Companies competing for contract awards are called bidders. Since public procurement is a legal domain, public contracts are legally enforceable agreements on purchases financed from public funds. Public contracts are publicized and monitored by contract notices. Contract notices announce competitive bidding for the award of public contracts (Distinto et al. 2016, p. 14) and update the progress of public contracts as they go through their life cycle, ending either in completion or cancellation. In our case we deal with public contracts that can be described more precisely as proposed contracts (Distinto et al. 2016, p. 14) until they are awarded and agreements with suppliers are signed. We use the term “public contract” as a conceptual shortcut to denote the initial phase of the contract life cycle.

Public procurement is an uncommon domain for recommender and matchmaking systems. Recommender systems are conventionally used in domains of leisure, such as books, movies, or music. In fact, the “experiment designs that evaluate different algorithm variants on historical user ratings derived from the movie domain form by far the most popular evaluation design and state of practice” (Jannach et al. 2010, p. 175) in recommender systems. Our use case thereby constitutes a rather novel application of these technologies.

Matchmaking in public procurement can be framed in its legal and economic context.

1.5.1 Legal context

Public procurement is a domain governed by law. We focus on Czech public procurement, for which there are two primary sources of relevant law: national law and EU law. Public procurement in the Czech Republic is governed by the act no. 2016/134 (Czech Republic 2016). The Czech Republic, as a member state of the European Union, harmonises its law with EU directives, in particular the directives 2014/24/EU (EU 2014a) and 2014/25/EU (EU 2014b) in the case of public procurement. The first directive regulates public procurement of works, supplies, or services, while the second regulates public procurement of utilities, including water, energy, transport, and postal services. The act no. 2016/134 transposes these directives into the Czech legislation. Besides legal terms and conditions to harmonize public procurement in the EU member states, these directives also define standard forms for EU public procurement notices,5 which constitute a common schema of public notices. The directives designate Tenders Electronic Daily (“Supplement to the Official Journal”)6 to serve as the central repository of public notices conforming to the standard forms.

In an even broader context, the EU member states adhere to the Agreement on Government Procurement (GPA)7 set up by the World Trade Organization. GPA mandates the involved parties to observe rules of fair, transparent, and non-discriminatory public procurement. In this way, the agreement sets basic expectations facilitating international public procurement.

Legal regulation of public procurement has important implications for matchmaking, including explicit formulation of demands, their proactive disclosure, desire for conformity, and standardization. Public procurement law requires explicit formulation of demands in contract notices to ensure a basic level of transparency. In most markets only supply is described explicitly, such as through advertising, while demand is left implicit. Since matchmaking requires demands to be specified, public procurement makes for a suitable market to apply the matchmaking methods.

There is a legal mandate for proactive disclosure of contract notices. Public contracts that meet the prescribed minimum conditions, including thresholds for the amounts of money spent, must be advertised publicly (Graux and Tom 2012, p. 7). Moreover, since public contracts in the EU are classified as public sector information, they fall within the regime of mandatory public disclosure under the terms of the Directive on the re-use of public sector information (EU 2013). In theory, this provides equal access to contract notices for all members of the public without the need to make requests for the notices, which in turn helps to enable fair competition in the public procurement market. In practice, the disclosure of public procurement data is often lagging behind the stipulations of law.

Overall, public procurement is subject to stringent and complex legal regulations. Civil servants responsible for public procurement therefore put a strong emphasis on legal conformance. Moreover, contracting authorities strive at length to make evaluation of contract award criteria incontestable in order to avoid protracted appeals of unsuccessful bidders that delay realization of contracts. Consequently, representatives of contracting authorities may exhibit high risk aversion and desire for conformity at the cost of compromising economic advantageousness. For example, the award criterion of lowest price may be overused because it reduces the probability of an audit threefold, even though it often leads to inefficient contracts, as observed for the Czech public procurement by Nedvěd et al. (2017). Desire for conformity can explain why not deviating from defaults or awarding popular bidders may be perceived as a safe choice. In effect, this may imply there is less propensity for diversity in recommendations produced via matchmaking. On the one hand, matchmaking may address this by accepting decreased diversity in matchmaking results in exchange for improved accuracy. On the other hand, it may intentionally emphasize diversity to offset the desire for conformity.

Finally, legal regulations standardize the communication in public procurement. Besides prescribing procedures that standardize how participants in public procurement communicate, they standardize the messages exchanged between the participants. Contracting authorities have to disclose public procurement data following the structure of standard forms for contract notices. The way in which public contracts are described in these forms is standardized to some degree via shared vocabularies and code lists, such as the Common Procurement Vocabulary (CPV) or the Nomenclature of Territorial Units for Statistics (NUTS). Standardization is especially relevant in the public sector, since it is characterized by “a variety of information, of variable granularity and quality created by different institutions and represented in heterogeneous formats” (Euzenat and Shvaiko 2013, p. 12).

Standardization of data contributes to defragmentation of the public procurement market. Defragmentation of the EU member states’ markets is the prime goal of the EU’s common regulatory framework. It aims to create a single public procurement market that enables cross-country procurement among the member states. Standardization simplifies the reuse of public procurement data by third parties, such as businesses or supervisory public bodies. Better reuse of data balances the information asymmetries that fragment the public procurement market.

Nevertheless, public procurement data is subject to imperfect standardization, which introduces variety in it. The imperfect standardization is caused by divergent transpositions of EU directives into the legal regimes of EU member states, lack of adherence to standards, underspecified standards leaving open space for inconsistencies, or meagre incentives and sanctions for abiding by the standards and the prescribed practices. Violations of the prescribed schema, lacking data validation, and absent enforcement of the mandated practices of public disclosure require a large effort from those wanting to make effective use of the data. For example, tasks such as search in aggregated data or establishing the identities of economic operators suffer from data inconsistency. Moreover, public procurement data can be distributed across disparate sources providing varying level of detail and completeness, such as in public profiles of contracting authorities and central registers. Fragmentation of public procurement data thus requires further data integration in order for the consumer to come to a unified view of the procurement domain that is necessary for conducting fruitful data analyses. In fact, one of the reasons why the public procurement market is dominated by large companies may be that they, unlike small and medium-sized enterprises, can afford the friction involved in processing the data.

According to our approach to data preparation, linked data provides a way to compensate for the impact of imperfect standardization. While a standard can be defined as a “coordination mechanism around non-proprietary knowledge that organizes and directs technological change” (Gosain 2003, p. 18), linked data makes it possible to cope with insufficient standardization by allowing for “cooperation without coordination” (Wood 2011, p. 5) or without centralization. Instead, linked data bridges local heterogeneities via the flexible data model of RDF and explicit links between the decentralized data sources. We describe our use of linked data in detail in Section 2.

1.5.2 Economic context

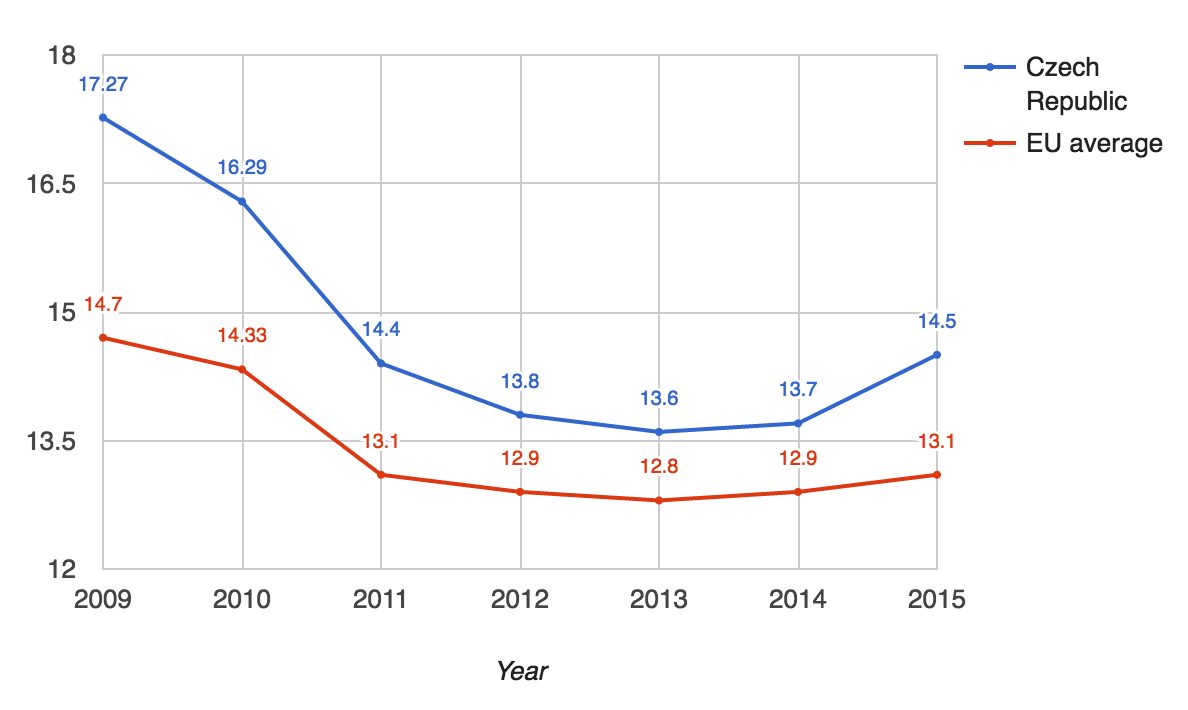

Public procurement constitutes a large share of the volume of transactions in the economy. The share of expenditures in the EU member states’ public procurement on works, goods, and services (excluding utilities) was estimated to be “13.1 % of the EU GDP in 2015, the highest value for the last 4 years” (European Commission 2016). This estimate amounted to 24.2 billion EUR in 2015 in the Czech Republic, which translated to 14.5 % of the country’s GDP (European Commission 2016). Compared with the EU, the Czech Republic exhibits consistent above-average values of this indicator, as can be seen in Fig. 1.

The large volume of transactions in public procurement gives rise to economies of scale, so that even minor improvements can accrue substantial economic impact, since the scale of operations in this domain provides ample opportunity for cost savings. Publishing open data on public procurement as well as using matchmaking methods can be considered among the examples of such improvements, which can potentially increase the efficiency of resource allocation in the public sector, as mentioned in Section 1.4.1.

Due to the volume of the public funds involved in public procurement, it is prone to waste and political graft. Wasteful spending in public procurement can be classified either as active waste, which entails benefits to the public decision maker, or as passive waste, which does not benefit the decision maker. Whereas active waste may result from corruption or clientelism, passive waste proceeds from inefficiencies caused by the lack of skills or incentives. Although active waste is widely perceived to be the main problem of public procurement, a study of the Italian public sector (Bandiera et al. 2009, p. 1282) observed that 83 % of uneconomic spending in public procurement can be attributed to passive waste. We therefore decided to focus on optimizing public procurement where most impact can be expected. We argue that matchmaking can help improve the public procurement processes and cut down passive waste. It can assist civil servants by providing relevant information, thus reducing the decision-making effort related to public procurement processes. We identified several use cases in public procurement where matchmaking can help.

1.5.3 Use cases for matchmaking

Matchmaking covers the information phase of market transaction (Schmid and Lindemann 1998, p. 194) that corresponds to the preparation and tendering stages in public procurement life-cycle (Nečaský et al. 2014, p. 865). During this phase “participants to the market seek potential partners” (Di Noia et al. 2004), so that public bodies learn about relevant bidders and companies learn about relevant open calls. In this sense, demands for products and services correspond to information needs and the aim of matchmaking is to retrieve the information that will satisfy them. Several use cases for matchmaking follow from the public procurement legislation according to the procedure types chosen for public contracts, such as:

- Matching bidders to suitable contracts to apply for in open procedures

- Matching relevant bidders that contracting authorities can approach in closed procedures

- Matching similar contracts to serve as models for a new contract

The use cases listed above are by no means intended to be comprehensive. They illustrate the typical situations in which matchmaking can be helpful.

Public procurement law defines types of procedures that govern how contracting authorities communicate with bidders. In particular, procedure types determine what data on public contracts is published, along with specifying who has access to it and when it needs to be made available. The procedure types can be classified either as open or as restricted. Open procedures mandate contracting authorities to disclose data on contracts publicly, so that any bidders can respond with offers. In this case, contracting authorities do not negotiate with bidders and contracts are awarded solely based on the received bids. Restricted procedures differ by including an extra screening step. As in open procedures, contracting authorities announce contracts publicly, but bidders respond with expression of interest instead of bids. Contracting authorities then screen the interested bidders and send invitations to tender to the selected bidders.

The chosen procedure type determines for which users matchmaking is relevant. Bidders can use matchmaking in both open and restricted procedures to be alerted about current business opportunities in public procurement that are relevant to them. Contracting authorities can use matchmaking in restricted procedures to get recommendations of suitable bidders. Moreover, in the case of the simplified under limit procedure, which is allowed in the Czech Republic for public contracts below a specified financial threshold, the contracting authority can approach bidders directly. In such a case, at least five bidders must be approached according to the act no. 2016/134 (Czech Republic 2016). In that scenario, matchmaking can help recommend appropriate bidders to approach about the public contract. There are also other procedure types, such as innovation partnership, in which matchmaking is applicable to a lesser extent.

An additional use case for similarity-based retrieval employed by matchmaking may occur during contract specification. The Czech act no. 2016/134 (Czech Republic 2016) suggests that contracting authorities estimate the contract price based on similar contracts. To address this use case, matchmaking can recommend similar contracts based on an incomplete description of the contract being formulated. The actual prices of the recommended contracts can then help estimate the price of the formulated contract.

1.6 Matchmaking

Matchmaking is an information retrieval task that ranks pairs of demands and offers according to the degree to which the offer satisfies the demand. It is a “process of searching the space of possible matches between demand and supplies” (Di Noia et al. 2004, p. 9). For example, matchmaking can pair job seekers with job postings, discover suitable reviewers for doctoral theses, or match romantic partners.

Matchmaking recasts either demands or offers as queries, while the rest is treated as data to query. In this setting, “the choice of which is the data, and which is the query depends just on the point of view” (Di Noia et al. 2004). Both data describing offers and data about demands can be turned either into queries or into queried data. For example, in our case we may treat public contracts as queries for suitable bidders, or, vice versa, bidder profiles may be recast as preferences for public contracts. Matchmakers are given a query and produce \(k\) results best-fulfilling the query (Di Noia et al. 2007, p. 278). Viewed from this perspective, matchmaking can be considered a case of top-\(k\) retrieval.
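The view of matchmaking as top-\(k\) retrieval can be illustrated by a minimal Python sketch. It assumes hypothetical bidder profiles described by sets of made-up classification codes and uses the Jaccard overlap as a stand-in scoring function. Neither the data nor the score is the one used by the matchmakers in this work.

```python
import heapq


def jaccard(a, b):
    """Overlap of two sets of codes, a stand-in for a real matching score."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def top_k(query, candidates, k, score=jaccard):
    """Return the identifiers of the k candidates that best fulfil the query."""
    scored = ((score(query, features), name) for name, features in candidates.items())
    return [name for _, name in heapq.nlargest(k, scored)]


# Hypothetical data: a contract recast as a query, bidder profiles as queried data.
contract = {"45000000", "45200000", "71000000"}
bidders = {
    "bidder-a": {"45000000", "45200000"},
    "bidder-b": {"71000000"},
    "bidder-c": {"33000000"},
}
ranking = top_k(contract, bidders, k=2)
```

The same function serves the opposite point of view: passing a bidder profile as the query and a collection of contracts as the candidates ranks contracts for the bidder.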

Matchmaking typically operates on complex data structures. Both demands and supplies may combine non-negotiable restrictions with more flexible requirements or vague semi-structured descriptions. Descriptions of demands and offers thus cannot be reduced to a single dimension, such as a price tag. Matchmakers operating on such complex data often suffer from the curse of dimensionality. It implies that linear increase in dimensionality may cause an exponential growth of negative effects. Complex descriptions make demands and offers difficult to compare. Since demands and supplies are usually complex, “most real-world problems require multidimensional matchmaking” (Veit et al. 2001). For example, matchmaking may involve similarity functions that aggregate similarities of individual dimensions.

Our work focuses on semantic matchmaking that requires a semantic level of agreement between offers and demands. In order to be able to compare descriptions of offers or demands, they need to share the same semantics (González-Castillo et al. 2001). Semantic matchmaking thus describes both queries and data “with reference to a shared specification of a conceptualization for the knowledge domain at hand, i.e., an ontology” (Di Noia et al. 2007, p. 270). Ontologies give the descriptions of entities involved in matchmaking comparable schemata. Data pre-processing may reformulate demands and offers to be comparable, e.g., by aligning their schemata. Since it leverages the semantic features of data, our approach can thus be regarded as schema-aware, as opposed to schema-agnostic matchmaking.

Matchmaking overlaps with recommender systems in many respects. Both employ similar methods to achieve their task. However, “every recommender system must develop and maintain a user model or user profile that, for example, contains the user’s preferences” (Jannach et al. 2010, p. 1). Instead of using user profiles, matchmaking uses queries. Although this is a simplifying description and the distinction between matchmaking and recommender systems is in fact blurry, designating our work as matchmaking is more telling.

Besides the similarities with recommender systems, matchmaking may invoke different connotations, as the term is used in other disciplines that imbue it with different meanings. For instance, it appears in graph theory naming the task of producing subsets of edges without common vertices. To avoid this ambiguity, in this text we will use the term “matchmaking” only in the way described here.

We adapted two general approaches for matchmaking: case-based reasoning and statistical relational learning. Both have many things in common and employ similar techniques to achieve their goal. Both learn from past data to produce predictions that are not guaranteed to be correct. A more detailed comparison of case-based reasoning with machine learning is in Richter and Weber (2013, p. 531).

1.6.1 Case-based reasoning

Case-based reasoning (CBR) is a problem solving methodology that learns from experiences of previously solved problems, which are called cases (Richter and Weber 2013, p. 17). A case consists of a problem specification and a problem solution. Experiences described in cases can be either positive or negative. Positive experiences propose solutions to be reused, whereas the negative ones indicate solutions to avoid. For example, experiences may concern diagnosing a patient and evaluating the outcome of the diagnosis, which may be either successful or unsuccessful. Cases are stored and organized in a case base, such as a database. The case base serves as a memory that retains the experiences to learn from.

The workings of CBR systems can be described in terms of the CBR cycle (Kolodner 1992). The cycle consists of four principal steps a CBR system may iterate through:

- Retrieve

- Reuse

- Revise

- Retain

In the Retrieve step a CBR system gets cases similar to the query problem. Case bases are thus usually built for efficient similarity-based retrieval. Since descriptions of cases are often complex, computing their similarity may involve determining and weighting similarities of their individual features. For each use case and each feature a different similarity measure may be adopted, which allows using pairwise similarity metrics tailored for particular kinds of data. This also enables assigning each feature a different weight, so that more relevant features may be emphasized. The employed metrics may be either symmetric or asymmetric. For example, we can use an asymmetric metric to favour lower prices over higher prices, even though their distance to the price in the query is the same. Since the similarity metrics allow fuzzy matches, reasoning in CBR systems is approximate. Consequently, as Richter and Weber argue, the characteristic that distinguishes CBR from deductive reasoning in logic or databases is that “it does not lead from true assumptions to true conclusions” (2013, p. 18).
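The weighting of per-feature similarities and the asymmetric treatment of prices can be sketched as follows. The sketch is only illustrative: the feature names, the weights, and the asymmetry factor of 2 are assumptions made for the example, not values used in this work.

```python
def price_similarity(query_price, case_price):
    """Asymmetric similarity: a lower price is penalized half as much as a higher one."""
    diff = (case_price - query_price) / query_price
    penalty = diff if diff >= 0 else -diff / 2  # assumed asymmetry factor of 2
    return max(0.0, 1.0 - penalty)


def code_similarity(query_codes, case_codes):
    """Symmetric similarity of two sets of classification codes (Jaccard)."""
    union = query_codes | case_codes
    return len(query_codes & case_codes) / len(union) if union else 0.0


# Feature name -> (local similarity measure, weight); the weights are illustrative.
MEASURES = {
    "price": (price_similarity, 0.25),
    "codes": (code_similarity, 0.75),
}


def case_similarity(query, case, measures=MEASURES):
    """Weighted average of the per-feature similarities."""
    total_weight = sum(weight for _, weight in measures.values())
    weighted = sum(
        weight * measure(query[feature], case[feature])
        for feature, (measure, weight) in measures.items()
    )
    return weighted / total_weight


query = {"price": 100.0, "codes": {"a", "b"}}
cheaper = {"price": 80.0, "codes": {"a", "b"}}
dearer = {"price": 120.0, "codes": {"a", "b"}}
```

Both `cheaper` and `dearer` are equally distant from the query price, yet `case_similarity` ranks the cheaper case higher because of the asymmetric price measure.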

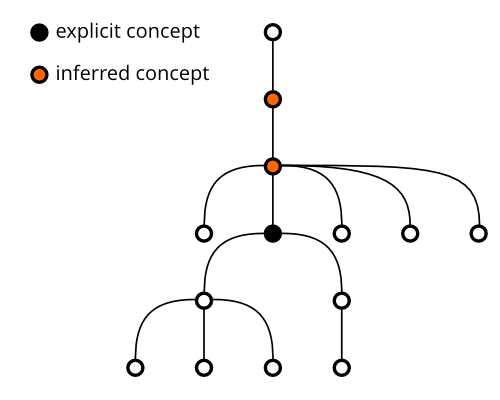

A key feature of CBR is that similarity computation typically requires background knowledge. While similarity of cardinal features can be determined without it, nominal features call for additional knowledge to assess their degree of similarity. For instance, a taxonomy may be used to compute similarity as the inverse of taxonomic distance between the values of the compared feature. Since hand-coding background knowledge is expensive, and typically requires assistance of domain experts, CBR research considered alternatives for knowledge acquisition, such as using external semantics from linked open data or discovering latent semantics via machine learning.
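As an illustration of similarity derived from background knowledge, the following sketch computes similarity as the inverse of taxonomic distance in a small tree. The taxonomy and its concept names are made up, and scaling the similarity as \(1 / (1 + d)\) is one of several possible choices.

```python
# Hypothetical taxonomy encoded as child -> parent links.
PARENT = {
    "construction": "root",
    "buildings": "construction",
    "roads": "construction",
    "services": "root",
    "it-services": "services",
}


def path_to_root(concept, parent=PARENT):
    """List the concept and all its ancestors, from the concept up to the root."""
    path = [concept]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path


def taxonomic_distance(a, b, parent=PARENT):
    """Number of edges on the shortest path between two concepts in the tree."""
    path_a, path_b = path_to_root(a, parent), path_to_root(b, parent)
    depth_in_b = {concept: steps for steps, concept in enumerate(path_b)}
    for steps_a, concept in enumerate(path_a):
        if concept in depth_in_b:  # lowest common ancestor found
            return steps_a + depth_in_b[concept]
    raise ValueError("concepts do not share a root")


def taxonomic_similarity(a, b, parent=PARENT):
    """Similarity as the inverse of taxonomic distance, scaled into (0, 1]."""
    return 1.0 / (1.0 + taxonomic_distance(a, b, parent))
```

In this toy taxonomy, sibling concepts such as `buildings` and `roads` come out as more similar than concepts from different branches, such as `buildings` and `it-services`.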

The retrieved nearest neighbour cases serve as potential sources of a solution to the query problem. Solutions of these cases are copied and adapted in the Reuse step to formulate a solution answering the query. If a solved case matches the problem at hand exactly, we may directly reuse its solution. However, exact matches are rare, so the solutions to matching cases often need to be adapted. For example, solutions may be reused at different levels. We may either reuse the process that generated the solution, reuse the solution itself, or do something in between.

The reused solution is evaluated in the Revise step to assess whether it is applicable to the query problem. Without this step a CBR system cannot learn from its mistakes. It is the step in which CBR may add user feedback.

Finally, in the Retain step, the query problem and its revised and adopted solution may be incorporated in the case base as a new case for future learning. Alternatively, the generated case may be discarded if the CBR system stores only the actual cases.
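The four steps of the cycle can be summarized in a deliberately simplified sketch. It assumes cases represented as dictionaries with a problem and a solution, reuses the solution of the nearest case without adaptation, and lets optional feedback override the proposed solution. All names and data are hypothetical.

```python
def retrieve(case_base, problem, similarity, k=1):
    """Retrieve: the k stored cases most similar to the query problem."""
    ranked = sorted(
        case_base,
        key=lambda case: similarity(problem, case["problem"]),
        reverse=True,
    )
    return ranked[:k]


def reuse(neighbours):
    """Reuse: copy the solution of the nearest case without adaptation."""
    return neighbours[0]["solution"]


def revise(solution, feedback=None):
    """Revise: feedback, if any, overrides the proposed solution."""
    return feedback if feedback is not None else solution


def retain(case_base, problem, solution):
    """Retain: store the solved problem as a new case."""
    case_base.append({"problem": problem, "solution": solution})


def overlap(a, b):
    """Toy similarity: the number of shared terms."""
    return len(a & b)


case_base = [
    {"problem": {"roads", "bridges"}, "solution": "bidder-a"},
    {"problem": {"software"}, "solution": "bidder-b"},
]
problem = {"roads", "tunnels"}
proposed = reuse(retrieve(case_base, problem, overlap))
final = revise(proposed)
retain(case_base, problem, final)
```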

The CBR cycle may be preceded by preparatory steps described by Richter and Weber (2013). A CBR system can be initialized by the Knowledge representation step, which structures the knowledge contained in cases the system learns from. Cases are explicitly formulated and described in a structured way, so that their similarity can be determined effectively. The simplest representation of a case is a set of feature-value pairs. However, using more sophisticated data structures is common. In order to compute similarity of cases, they must be described using comparable features, or, put differently, the descriptions of cases must adhere to the same schema.

Problem formulation is a preliminary step in which a query problem is formulated. A query can be considered a partially specified case. It may be either underspecified, such that it matches several existing cases, or overspecified, if it has no matches due to being too specific. Underspecified queries may require solutions from the matching cases to be combined, while overspecified queries may need to be relaxed or provided with partial matches.

Overall, the CBR cycle resembles human reasoning, such as problem solving by finding analogies. In fact, the CBR research is rooted in psychology and cognitive science. It is also similar to case law, which reasons from precedents to produce new interpretations of law. Thanks to these similarities, CBR is perceived as natural by its users (Kolodner 1992), which makes its function usually easy to explain.

CBR is commonly employed in recommender systems. Case-based recommenders are classified as a subset of knowledge-based recommenders (Jannach et al. 2010). Similarly to collaborative recommendation approaches, case-based recommenders exploit data about past behaviour. However, unlike collaborative recommenders, “the case-based approach enjoys a level of transparency and flexibility that is not always possible with other forms of recommendation” (Smyth 2007, p. 370), since it is based on reasoning with explicit knowledge. Our adaptation of CBR for matchmaking can be thus considered a case-based recommender.

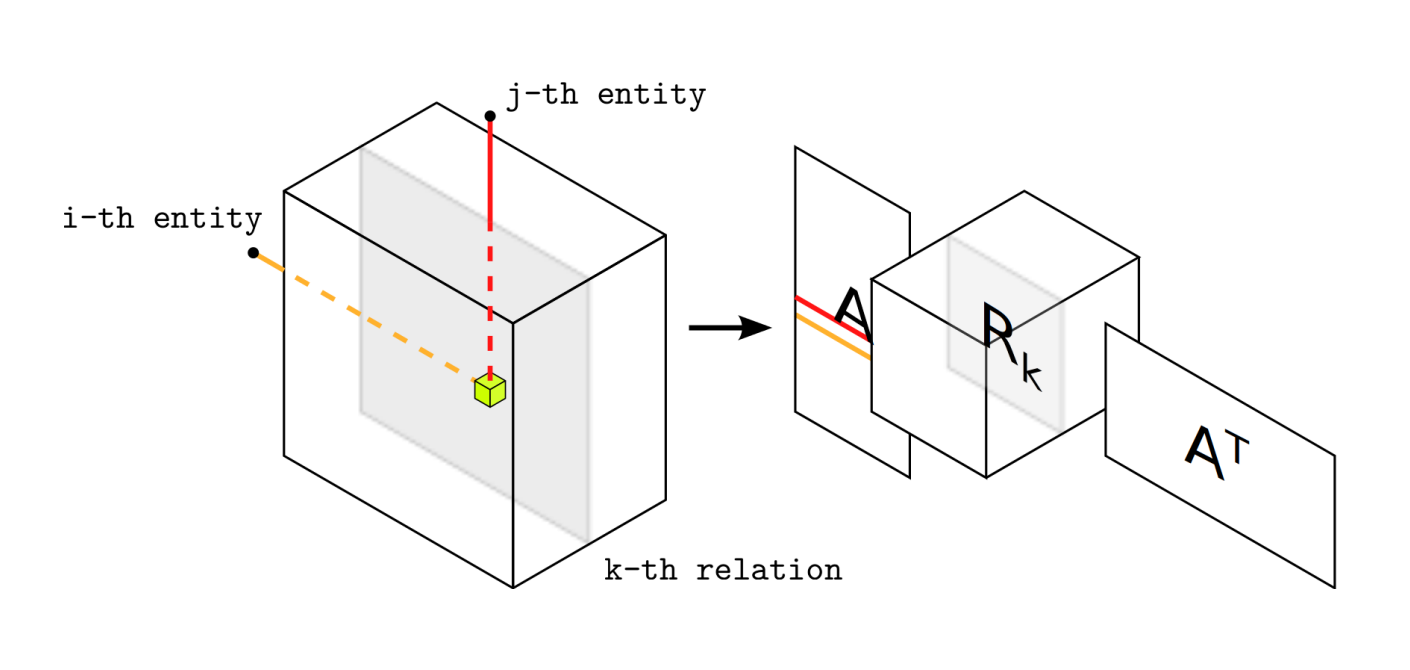

1.6.2 Statistical relational learning

Statistical relational learning (SRL) is a subfield of machine learning that is concerned with learning from relational data. SRL learns models that “describe probability distributions \(P(\{X\})\) over the random variables in a relational domain” (Tresp and Nickel 2014, p. 1554). Here, \(X\) denotes a random variable and \(\{X\}\) refers to a set of such variables in a relational domain. The learned model reflects the characteristic patterns and global dependencies in relational data. Unlike inference rules, these statistical patterns may not be universally true, but have useful predictive power nonetheless. An example of such pattern is homophily (McPherson et al. 2001), which describes the tendency of similar entities to be related. A model created by SRL is used to predict probabilities of unknown relations in data. In other words, in SRL “the underlying idea is that reasoning can often be reduced to the task of classifying the truth value of potential statements” (Nickel et al. 2012, p. 271).

There are two basic kinds of SRL models: models with observable features and models with latent features. Our work focuses on the latent feature models. Unlike observable features, latent features are not directly observed in data. Instead, they are assumed to be the hidden causes for the observed variables. Consequently, results from machine learning based on latent features are usually difficult to interpret. Latent feature models are used to derive relationships between entities from interactions of their latent features (Nickel et al. 2016, p. 17). Since latent features correspond to global patterns in data, they can be considered products of collective learning.

Collective learning “refers to the effect that an entity’s relationships, attributes, or class membership can be predicted not only from the entity’s attributes but also from information distributed in the network environment of the entity” (Tresp and Nickel 2014, p. 1550). It involves “automatic exploitation of attribute and relationship correlations across multiple interconnections of entities and relations” (Nickel et al. 2012, p. 272). The exploited contextual information propagates through relations in data, so that the inferred dependencies may span across entity boundaries and involve entities that are more distant in a relational graph. Among other things, this feature of collective learning can help cope with modelling artefacts in RDF, such as intermediate resources that decompose n-ary relations into binary predicates.

Collective learning is a distinctive feature of SRL and is particularly manifest in “domains where entities are interconnected by multiple relations” (Nickel et al. 2011). Conversely, traditional machine learning expects data from a single relation, usually provided in a single propositional table. It considers only attributes of the involved entities, which are assumed to be mutually independent. This is one of the reasons that can explain why SRL was demonstrated to be able to produce superior results for relational data when compared to learning methods that do not take relations into account (Tresp and Nickel 2014, p. 1551). These results mark the importance of being able to leverage the relations in data effectively.

The relevance of SRL grows as relational data becomes ever more prevalent. In fact, many datasets have a relational nature. For instance, vast amounts of relational data are produced by social networking sites. Relational data appears in many contexts, including relational databases, ground predicates in first order logic, or RDF.

Using relational datasets is nevertheless challenging, since many of them are incomplete or noisy and contain uncertain or false statements. Fortunately, SRL is relatively robust to inconsistencies, noise, or adversarial input, since it utilizes non-deterministic dependencies in data. Yet it is worth noting that even though SRL usually copes well with faulty data, systemic biases in the data will manifest in biased results produced by this method.

LOD is a prime example of a large-scale source of relational data afflicted with the above-mentioned ills. The open nature of LOD has direct consequences for data inconsistency and noisiness. These consequences make LOD challenging for reasoning and querying. While SRL can overcome these challenges to some extent, they pose a massive hurdle for traditional reasoning using inference based on description logic. Logical inference imposes strict constraints on its input, which are often violated in real-world data (Nickel et al. 2016, p. 28):

“Concerning requirements on the input data, it is quite unrealistic to expect that data from the open Semantic Web will ever be clean enough such that classical reasoning systems will be able to draw useful inferences from them. This would require Semantic Web data to be engineered strongly according to shared principles, which not only contrasts with the bottom-up nature of the Web, but is also unrealistic in terms of conceptual realizability: many statements are not true or false, they rather depend on the perspective taken.” (Hitzler and van Harmelen 2010, p. 42)

To compound matters further, reasoning with ontologies is computationally demanding, which makes it difficult to scale to the larger datasets in LOD. While we cannot guarantee most LOD datasets to be sound enough for reasoning based on logical inference, “it is reasonable to assume that there exist many dependencies in the LOD cloud which are rather statistical in nature than deterministic” (Nickel et al. 2012, p. 271). Approximate reasoning by SRL is well-suited to exploit these dependencies and to address the challenges inherent to LOD. This setup enables logical inference to complement SRL where appropriate. For example, results produced by logical inference can serve as gold standard for evaluation of SRL, such as in case of Nickel et al. (2012), who used rdfs:subClassOf inferences to evaluate a classification task.

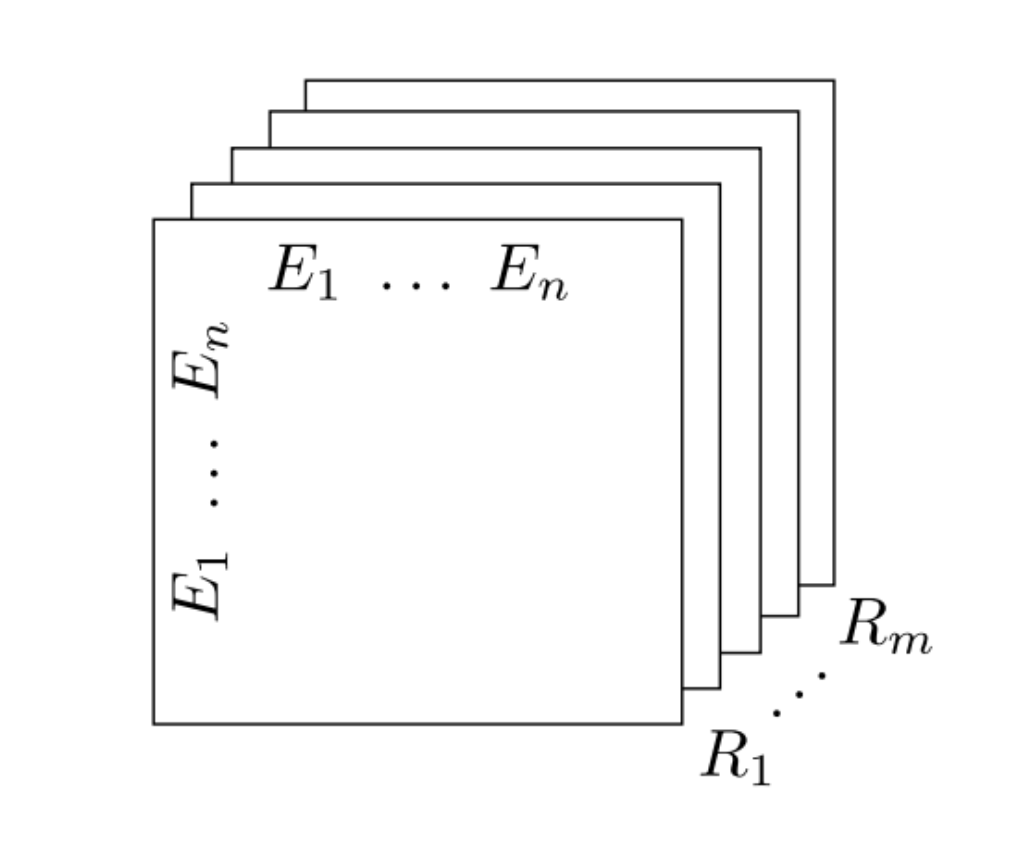

We conceived matchmaking via SRL as a link prediction task. Link prediction is “concerned with predicting the existence (or probability of correctness) of (typed) edges in the graph” (Nickel et al. 2016, p. 14). In the context of knowledge graphs, such as LOD, link prediction is also known as knowledge graph completion (Nickel et al. 2016, p. 14). An example application of link prediction is discovery of protein interactions in bioinformatics. Typical cases of link prediction operate on multi-relational and noisy data, which makes the task suitable for SRL. In our case, we predict the link between a public contract and its awarded bidder.
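To make the link prediction setting concrete, the following sketch scores candidate links with a bilinear form over latent features, in the spirit of latent feature models such as RESCAL. The two-dimensional latent vectors and the relation matrix are made up for illustration. In an actual model they would be learned from data.

```python
def bilinear_score(subject, relation, obj):
    """Score a candidate link as subject^T * relation * object."""
    return sum(
        subject[i] * relation[i][j] * obj[j]
        for i in range(len(subject))
        for j in range(len(obj))
    )


def rank_bidders(contract, relation, bidders):
    """Order bidders by the predicted plausibility of the award link."""
    return sorted(
        bidders,
        key=lambda name: bilinear_score(contract, relation, bidders[name]),
        reverse=True,
    )


# Made-up latent features; a real model would learn them from relational data.
contract = [1.0, 0.0]
awarded_to = [[0.0, 1.0], [1.0, 0.0]]  # latent interaction matrix of the relation
bidders = {"bidder-a": [0.2, 0.9], "bidder-b": [0.8, 0.1]}
ranking = rank_bidders(contract, awarded_to, bidders)
```

Ranking the bidders by the score of the hypothetical `awarded_to` relation turns link prediction into the top-\(k\) retrieval described in Section 1.6.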

2 Data preparation

A fundamental part of the work presented here is the preparation of the Czech public procurement dataset enriched with linked data. The prepared dataset was used to evaluate the matchmakers we built as our main contribution. It served as a use case for applied research in the public procurement domain to explore whether the proposed matchmakers provide useful recommendations in a real-world setting.

In this chapter we describe the data preparation using the framework of Extract-Transform-Load (ETL) (Kimball and Caserta 2004). ETL is a workflow for data preparation that is guided by the principle of the separation of concerns, as indicated by its compound name. It conceptualizes a sequence of data processing steps, each endowed with a single main responsibility. Each step is further subdivided into smaller steps, each again endowed with a single responsibility. The self-describing nature of RDF can further contribute to cleaner separation of concerns in the ETL workflow, so that the coupling between the steps involved is reduced.

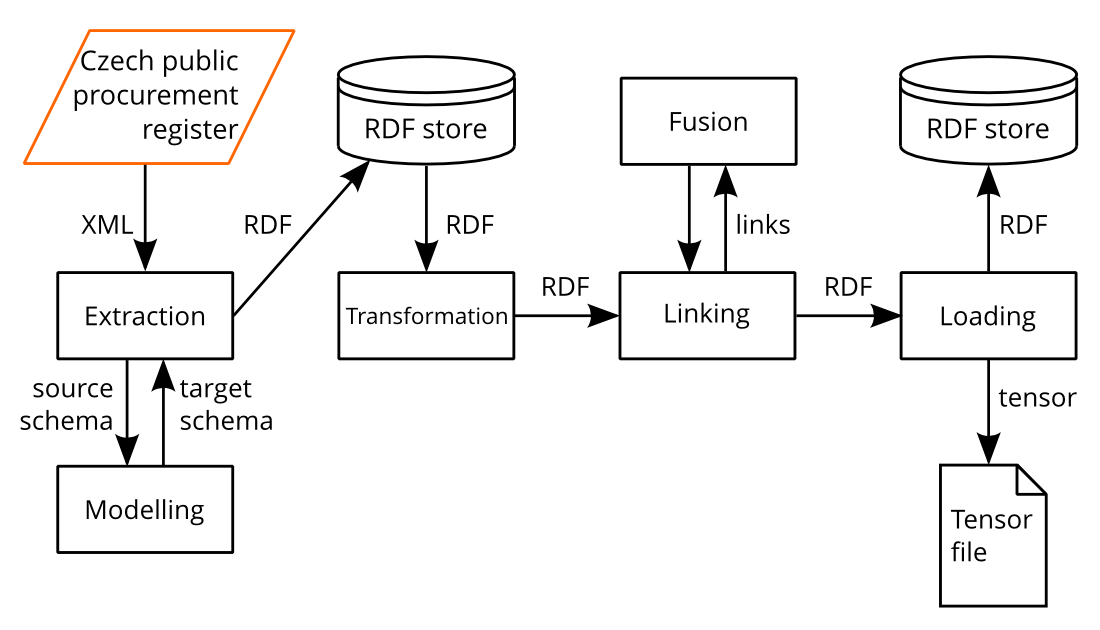

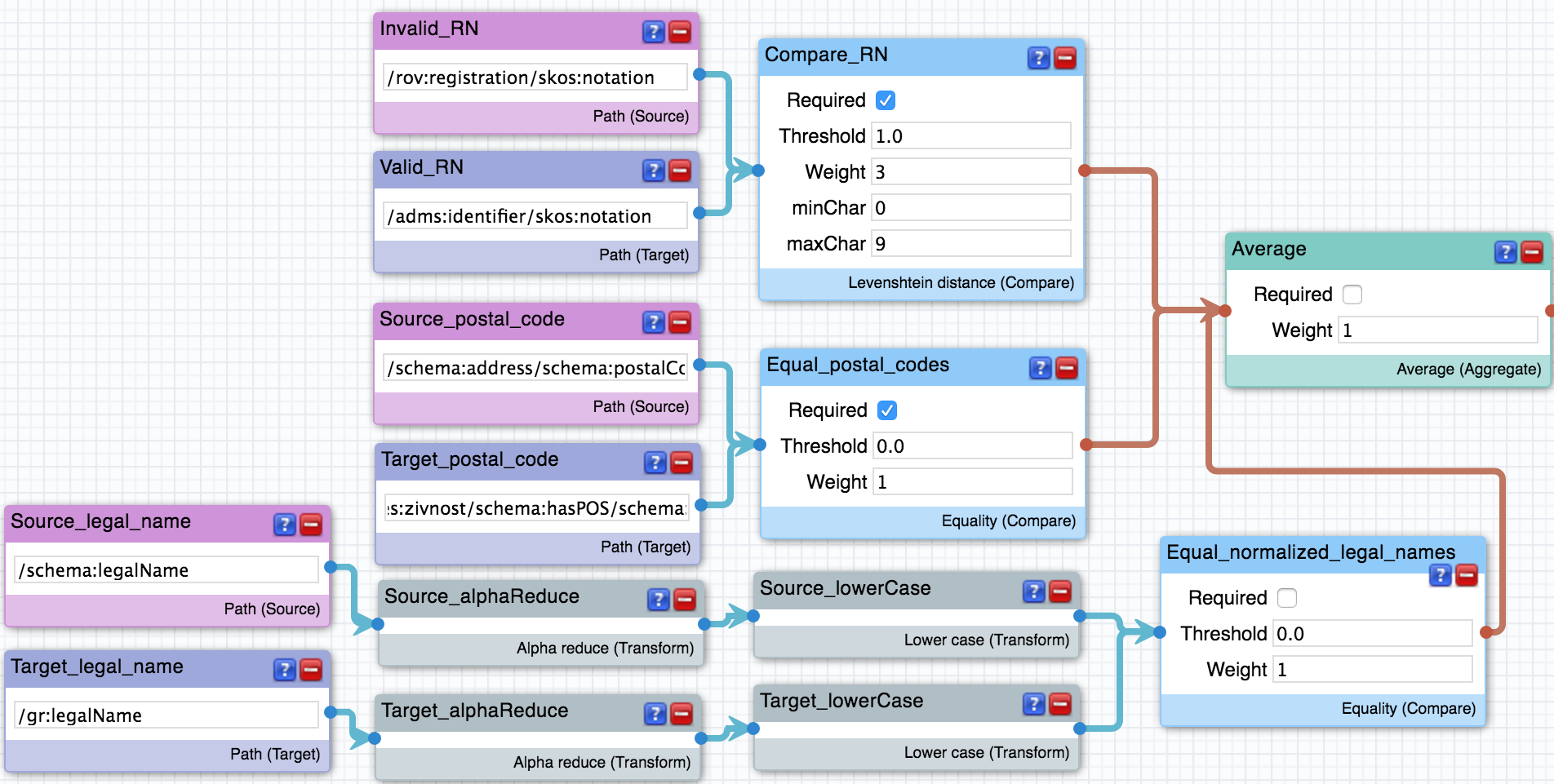

The structure of this chapter roughly follows the steps of ETL. We extend them to modelling, extraction, transformation, linking, fusion, and loading. Modelling produces a target schema, onto which the data is mapped in the course of extraction and transformation. In our setting, extraction refers to the process of converting non-RDF data to RDF. Once data is available in RDF, its processing is described as transformation. Linking discovers co-referent identities, while fusion resolves them to the preferred identities, along with resolving conflicts in data that may arise. Linking and fusion are interleaved and executed iteratively, each building on the results of its previous step. Loading is concerned with making the data available in a way that the matchmaking methods can operate efficiently. The adopted ETL workflow evolved from the workflow that was previously described by this dissertation’s author (2014a). Fig. 2 summarizes the overall workflow.
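The interplay of linking and fusion can be sketched on triples represented as plain tuples. The sketch assumes that linking has already produced pairs of co-referent identifiers, clusters them with a union-find structure, and resolves each cluster to a preferred identifier. Choosing the lexicographically smallest identifier as the preferred one is an arbitrary rule adopted for the example, and the identifiers are hypothetical.

```python
def find(parent, item):
    """Follow parent pointers to the representative of the item's cluster."""
    parent.setdefault(item, item)
    while parent[item] != item:
        parent[item] = parent[parent[item]]  # path halving
        item = parent[item]
    return item


def link_clusters(same_as_pairs):
    """Linking: group identifiers connected by co-reference links."""
    parent = {}
    for a, b in same_as_pairs:
        root_a, root_b = find(parent, a), find(parent, b)
        if root_a != root_b:
            parent[root_b] = root_a
    clusters = {}
    for item in list(parent):
        clusters.setdefault(find(parent, item), set()).add(item)
    return list(clusters.values())


def fuse(triples, clusters):
    """Fusion: rewrite every alias to the preferred identifier of its cluster."""
    preferred = {alias: min(cluster) for cluster in clusters for alias in cluster}
    return {(preferred.get(s, s), p, preferred.get(o, o)) for s, p, o in triples}


# Hypothetical co-reference links and data.
pairs = [("ex:b2", "ex:b1"), ("ex:b3", "ex:b2")]
triples = {
    ("ex:c1", "ex:awardedTo", "ex:b3"),
    ("ex:c2", "ex:awardedTo", "ex:b1"),
}
clusters = link_clusters(pairs)
fused = fuse(triples, clusters)
```

After fusion, both contracts point to the same bidder identifier, so the link between them that the aliases had broken becomes available again.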

We employed materialized data integration. Unlike virtual integration, materialized integration persists the integrated data. This allowed us to achieve the query performance required by data transformations and SPARQL-based matchmaking. Our approach to ETL can be regarded as Extract-Load-Transform (ELT). We first loaded the extracted data into an RDF store to make transformation, linking, and fusion via SPARQL Update operations feasible. Using RDF allows loading data first and integrating it later, while in the traditional context of relational databases, data integration typically precedes loading. We used a batch ETL approach, since our source data is published in subsets partitioned per year. Real-time ETL would be feasible if the source data were provided at a finer granularity, such as in the case of the profiles of contracting authorities, which publish XML feeds informing about current public contracts.

Using RDF provides several advantages to data preparation. Since there is no fixed schema in RDF, any RDF data can be merged and stored along with any other RDF data. Merge as union applies to schemas as well, because they too are formalized in RDF. The flexible data model of RDF and the expressive power of RDF vocabularies and ontologies make it possible to handle variation in the processed data sources. Vocabularies and ontologies make RDF into a self-describing data format. Producing RDF as the output of data extraction provides leverage for the subsequent parts of the ETL process, since the RDF structure allows expressing complex operations transforming the data. Moreover, the homogeneous structure of RDF “obsoletes the structural heterogeneity problem and makes integration from multiple data sources possible even if their schemas differ or are unknown” (Mihindukulasooriya et al. 2013). Explicit, machine-readable description of RDF data enables automating many data processing tasks. In the context of data preparation, this feature of RDF reduces the need for manual intervention in the data preparation process, which decreases its cost and increases its consistency by avoiding human-introduced errors. However, “providing a coherent and integrated view of data from linked data resources retains classical challenges for data integration (e.g., identification and resolution of format inconsistencies in value representation, handling of inconsistent structural representations for related concepts, entity resolution)” (Paton et al. 2012).

Linked data provides a way to practice pay-as-you-go data integration (Paton et al. 2012). The pay-as-you-go principle suggests reducing the costs invested up-front in data preparation, recognizing opportunities for incremental refinement of the prepared data, and revising which opportunities to invest in based on user feedback (Paton et al. 2016). The required investment in data preparation is inversely proportional to the willingness of users to tolerate imperfections in data. In our case, we used the feedback from the evaluation of matchmaking as an indirect indication of the parts of data preparation that need to be improved.

The principal goal of ETL is to add value to data. A key way to do so is to improve data quality. Since data quality is typically defined as fitness for use, we focus on the fitness of the prepared data for matchmaking in particular. Fitness for this use is affected by several data quality dimensions (Batini and Scannapieco 2006). The key relevant dimensions are duplication and completeness. A lack of duplicate entities reduces the search space that matchmaking has to explore. Conversely, duplicates break links that can be leveraged by matchmaking. For instance, if there are unknown aliases for a bidder, then data linked from these aliases is unreachable. Incompleteness causes features potentially valuable for matchmaking to be missing. It makes data less descriptive and increases its sparseness, in turn making matchmaking less effective. However, measuring both these dimensions is difficult. In the case of duplication, we can only measure the relative improvement of the deduplicated dataset when compared to the input dataset. Measuring the duplication of the output dataset is infeasible, since it may contain unknown entity aliases. Similarly, the evaluation of completeness requires either a reference dataset to compare to or reliable cardinalities to expect in the target dataset’s schema. Unfortunately, reference datasets are typically unavailable. Cardinalities of properties either cannot be relied upon or their computation is undermined by unknown duplicates.

The goals pursued by public disclosure and aggregation of procurement data are often undermined by insufficient data integration, which is caused by the heterogeneity of data provided by diverse contracting authorities. ETL can remedy some of the adverse effects of the heterogeneity and fragmentation of public procurement data. However, at many stages of data preparation we needed to compromise on data quality due to the effort required to achieve it. We are explicit about the trade-offs involved, because it helps to understand the complexity of the data preparation endeavour. Moreover, for some issues the source data does not provide enough information to resolve them at all.

Low data quality can undermine both matchmaking and data analyses. Data analyses are often based on aggregation queries, which can be significantly skewed by incomplete or duplicate data. Incompleteness of data introduces an involuntary sampling bias into analyses of such data. Duplication has a similar effect. For instance, aggregated counts of duplicated entities are unreliable, as they count distinct identifiers instead of counting distinct real-world entities, which may be associated with multiple identifiers. Uncertain quality disqualifies the data from being used in scenarios where publishing false positives is not an option. For example, probabilistic hypotheses are of no use for serious journalism, which cannot afford to make possibly untrue claims. Instead, such findings need to be considered as hints indicating where further exploration could produce more reliable outcomes. By contrast, in the probabilistic setting of matchmaking even imperfect data can be useful. Moreover, we assume that the impact of errors in data can be partially remedied by the volume of data. Finally, since we follow the pay-as-you-go approach, there is an opportunity to invest more in improving data quality if required.
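The skew that duplicates introduce into aggregated counts can be shown with a small sketch in Python. The bidder identifiers and the alias mapping are hypothetical; in practice, the mapping would be an output of entity reconciliation, and unknown aliases would remain uncorrected.

```python
from collections import Counter


def count_awards(awarded_bidders, same_as=None):
    """Count awards per bidder, optionally resolving known aliases first.

    `same_as` maps an alias identifier to its canonical identifier.
    """
    same_as = same_as or {}
    return Counter(same_as.get(bidder, bidder) for bidder in awarded_bidders)


# Hypothetical awards: ex:bidder/1-alias denotes the same company as ex:bidder/1.
awards = ["ex:bidder/1", "ex:bidder/1-alias", "ex:bidder/2", "ex:bidder/1"]

naive = count_awards(awards)
resolved = count_awards(awards, same_as={"ex:bidder/1-alias": "ex:bidder/1"})
```

The naive aggregation reports three distinct bidders and splits the awards of one company across two identifiers, whereas the resolved aggregation reports two bidders and attributes all three awards to the same company.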

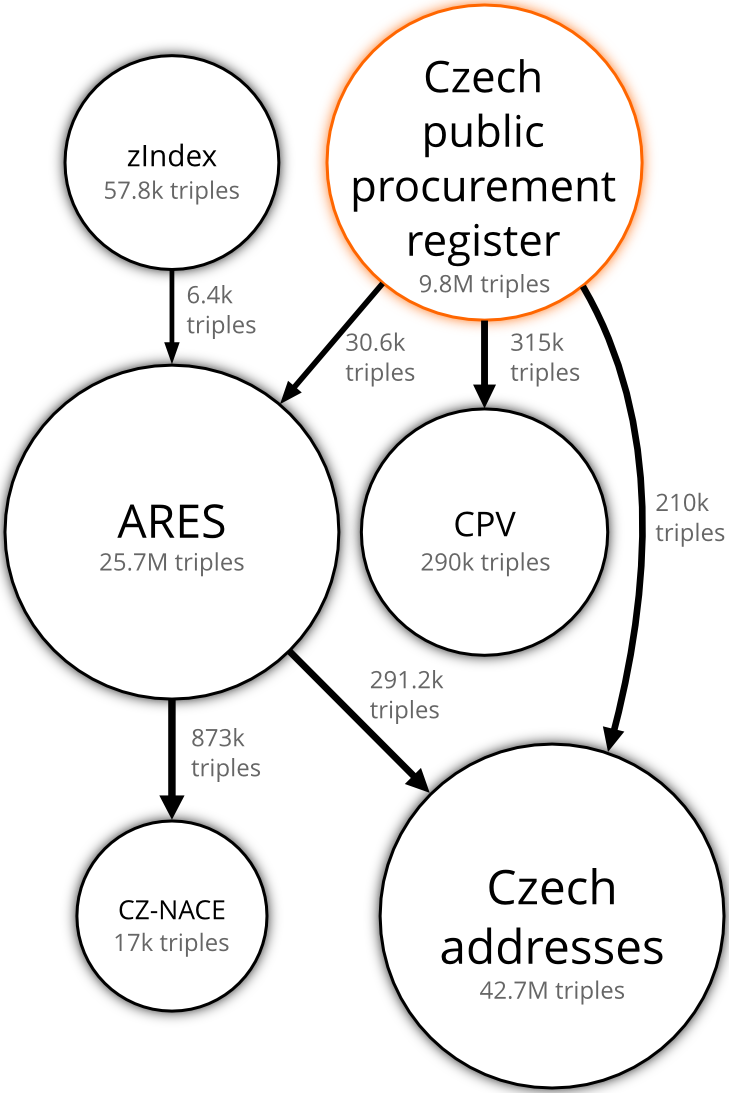

Preparation of the dataset for matchmaking involved several sources. The selection of each data source had a motive justifying the effort spent preparing the data. We selected the Czech public procurement register as our primary dataset, to which we linked the Common Procurement Vocabulary (CPV), Czech address data, Access to Registers of Economic Subjects/Entities (ARES), and zIndex. The Czech public procurement register provides historical data on Czech public contracts since 2006. CPV organizes the objects of public contracts in a hierarchical structure, which allows inferences about the similarity of the objects to be drawn from their distance in the structure of the vocabulary. Czech address data offers geo-coordinates for the reference postal addresses in the Czech Republic. By matching postal addresses to their canonical forms from this dataset, postal addresses can be geocoded. ARES serves as a reference dataset for business entities. We used it to reconcile the identities of business entities in the Czech public procurement data. zIndex assigns fairness scores to contracting authorities in Czech public procurement. The ETL of each of these datasets is described in more detail in the following sections.
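To illustrate how distance in the CPV hierarchy can serve as a proxy for similarity, the following Python sketch derives the position of a concept from its code. It rests on simplifying assumptions that are not taken from the data preparation itself: the hierarchy is read off the first eight digits of the code, trailing zeros mark the unused lower levels, the first two digits identify the top-level division, and every code prefix is treated as if it were an existing concept. Codes other than 31600000 serve only as illustrative inputs.

```python
def significant_prefix(code: str) -> str:
    """Return the digits that position a CPV code in the hierarchy.

    Assumption: trailing zeros are padding; at least two digits are kept,
    since the first two digits identify the top-level division.
    """
    digits = code[:8]  # drops a check digit suffix if present
    stripped = digits.rstrip("0")
    return digits[:max(len(stripped), 2)]


def depth(prefix: str) -> int:
    """Depth below a virtual root: a two-digit division has depth 1."""
    return len(prefix) - 1


def cpv_distance(code_a: str, code_b: str) -> int:
    """Number of edges between two codes via their lowest common ancestor."""
    prefix_a, prefix_b = significant_prefix(code_a), significant_prefix(code_b)
    common = 0
    for digit_a, digit_b in zip(prefix_a, prefix_b):
        if digit_a != digit_b:
            break
        common += 1
    # Codes sharing fewer than two digits meet only at the virtual root.
    ancestor_depth = common - 1 if common >= 2 else 0
    return depth(prefix_a) + depth(prefix_b) - 2 * ancestor_depth
```

Under these assumptions, `cpv_distance("31600000", "31620000")` is 1, because the second code is a direct narrowing of the first, while `cpv_distance("31600000", "45000000")` is 3, because the two codes share only the virtual root. A smaller distance can then be interpreted as higher similarity of the contract objects.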

The Czech public procurement dataset is available at https://linked.opendata.cz/dataset/isvz. The source code used for data preparation is openly available in a code repository.21 This allows others to replicate and scrutinize the way we prepared the data. The data preparation tasks were implemented via declarative programming using XSLT, SPARQL Update operations, and XML specifications of linkage rules. The high-level nature of declarative programming made the implementation concise and helped us to avoid bugs by abstracting from lower-level data manipulation. The work on data preparation started as early as 2011, which may explain the diverse choices of the employed tools. Throughout the data preparation, we adopted more suitable and mature tools as they appeared. A reference for the software involved is provided in Appendix 6.

2.1 Modelling

The central dataset that we used in matchmaking is the Czech public procurement register.22 The available data on each contract in this dataset differs, although generally the contracts feature data such as their contracting authority, the contract’s object, award criteria, and the awarded bidder, altogether comprising the primary data for matchmaking demand and supply. As we described previously, viewed from the market perspective, public contracts can be considered as expressions of demand, while awarded tenders express the supply.

Since public procurement often pursues multiple objectives, public contracts are demands with variable degrees of complexity and completeness. Their explicit formulation thus requires sufficiently expressive modelling, making it a fitting use case for the semantic web technologies, including RDF and RDF Schema. Public contracts may stipulate non-negotiable qualification criteria as well as desired but negotiable qualities sought in bidders. The objects of public contracts are often heterogeneous products or services that cannot be described only in terms of price. Apart from their complex representation, public contracts have many features unavailable as structured data. These features comprise unstructured documentation or undisclosed terms and conditions. Consequently, matchmaking has to operate on simplified models of public contracts.

We described this dataset with a semantic data model. One key goal of modelling this data was to establish a structure that can be leveraged by matchmaking. However, modelling data in RDF is typically agnostic of its expected use. Instead, it is guided by a conceptual model that opens the data to a wide array of ways to reuse the data. Nevertheless, the way we chose to model our data reflected our priorities.

We focused on facilitating querying and data integration via the data model. Instead of enabling logical inferences to be drawn by reasoning with ontological constructs, we wanted to simplify and speed up querying. To that end, for example, we avoided verbose structures to reduce the size of the queried data. For the sake of better integration with other data, we established IRIs as persistent identifiers and reused common identifiers where possible. Thanks to the schema-less nature of RDF, shared identifiers allowed us to merge datasets automatically.

The extracted public procurement data was described using a mixture of RDF vocabularies, out of which the Public Contracts Ontology was the most prominent.

2.1.1 Public Contracts Ontology

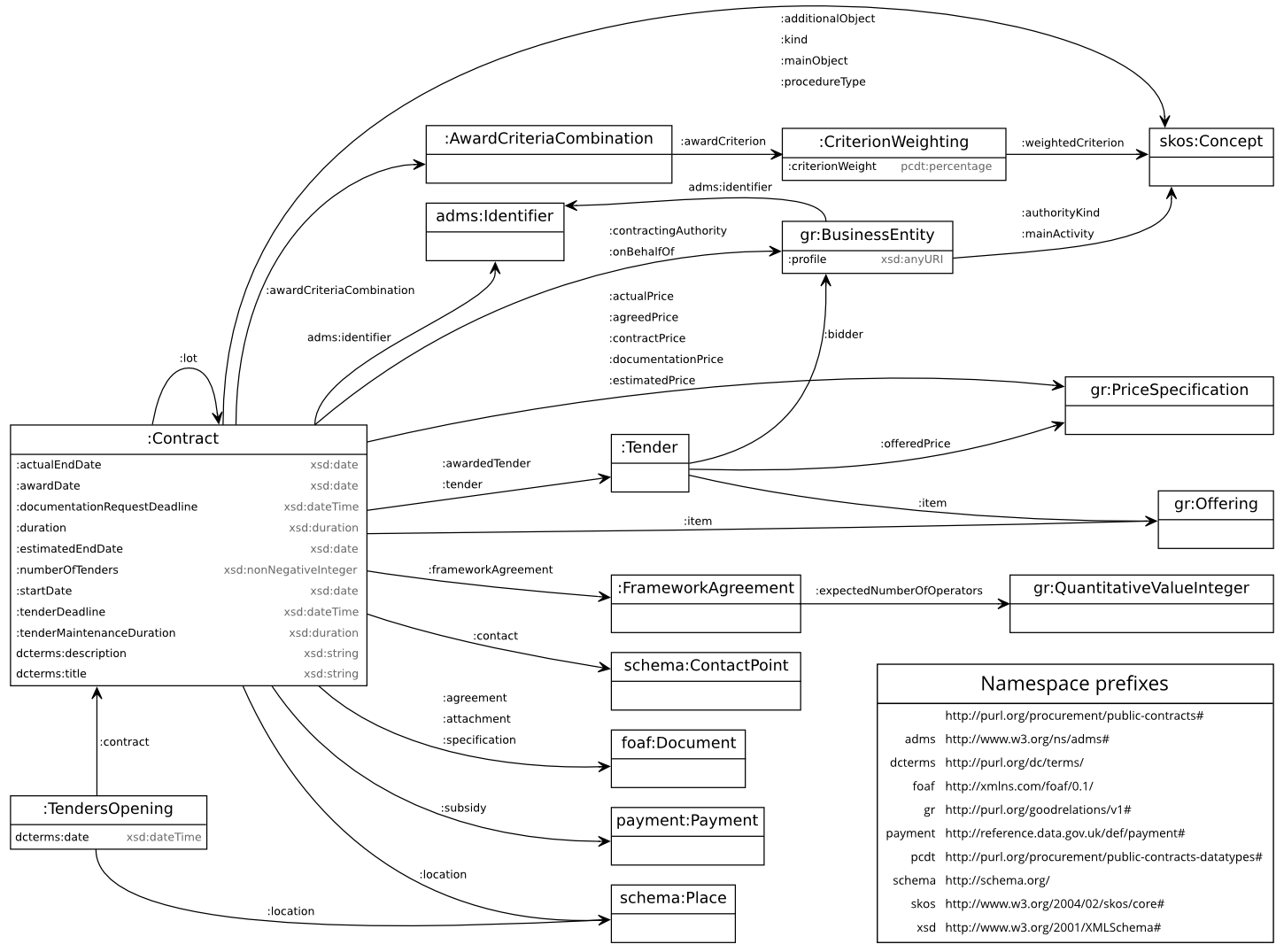

Public Contracts Ontology23 (PCO) is a lightweight RDF vocabulary for describing data about public contracts. The vocabulary has been developed by the Czech OpenData.cz initiative since 2011, and this dissertation’s author has been one of its editors. Its design is driven by what public procurement data is available, mostly in the Czech Republic and at the EU level. The data-driven approach “implies that vocabularies should not use conceptualizations that do not match well to common database schemas in their target domains” (Mynarz 2014b).

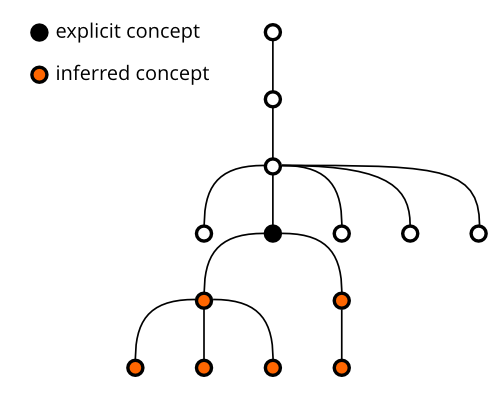

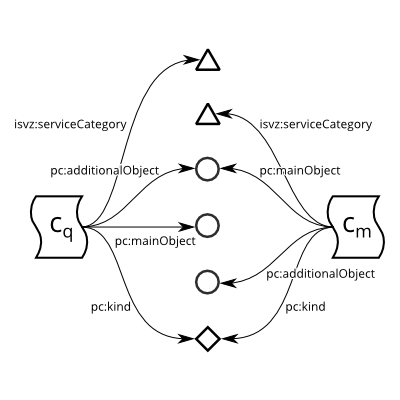

PCO establishes a reusable conceptual vocabulary to provide a consistent way of describing public contracts. This aim for reusability corresponds with the established principle of minimal ontological commitment (Gruber 1993). The vocabulary exhibits a simple snowflake structure oriented around contract as the central concept. It extensively reuses and links other vocabularies, such as Dublin Core Terms24 or GoodRelations.25 Presutti et al. (2016) discourage direct reuse of linked data vocabularies, because it introduces a dependency on external vocabulary maintainers and because the consequences of the ontological constraints of the reused terms are rarely considered. Nevertheless, we argue that these vocabularies are often maintained by organizations more stable than the organization of the vocabulary’s creator and that the mentioned ontological constraints are typically non-existent in lightweight linked data vocabularies, such as Dublin Core Terms. Several properties in PCO have their range restricted to values enumerated in code lists. For example, there is a code list for procedure types, including open or restricted procedures. These core code lists are represented using the Simple Knowledge Organization System (SKOS) (Miles and Bechhofer 2009) and are a part of the vocabulary. The design of PCO is described in more detail in Klímek et al. (2012) and Nečaský et al. (2014). The class diagram in Fig. 3 shows the Public Contracts Ontology.

The vocabulary was used to a large extent in the LOD2 project.26 For example, it was applied to Czech, British, EU, and Polish public procurement data. In this way, we validated the portability of the vocabulary across various legal environments and ways of publishing public procurement data.

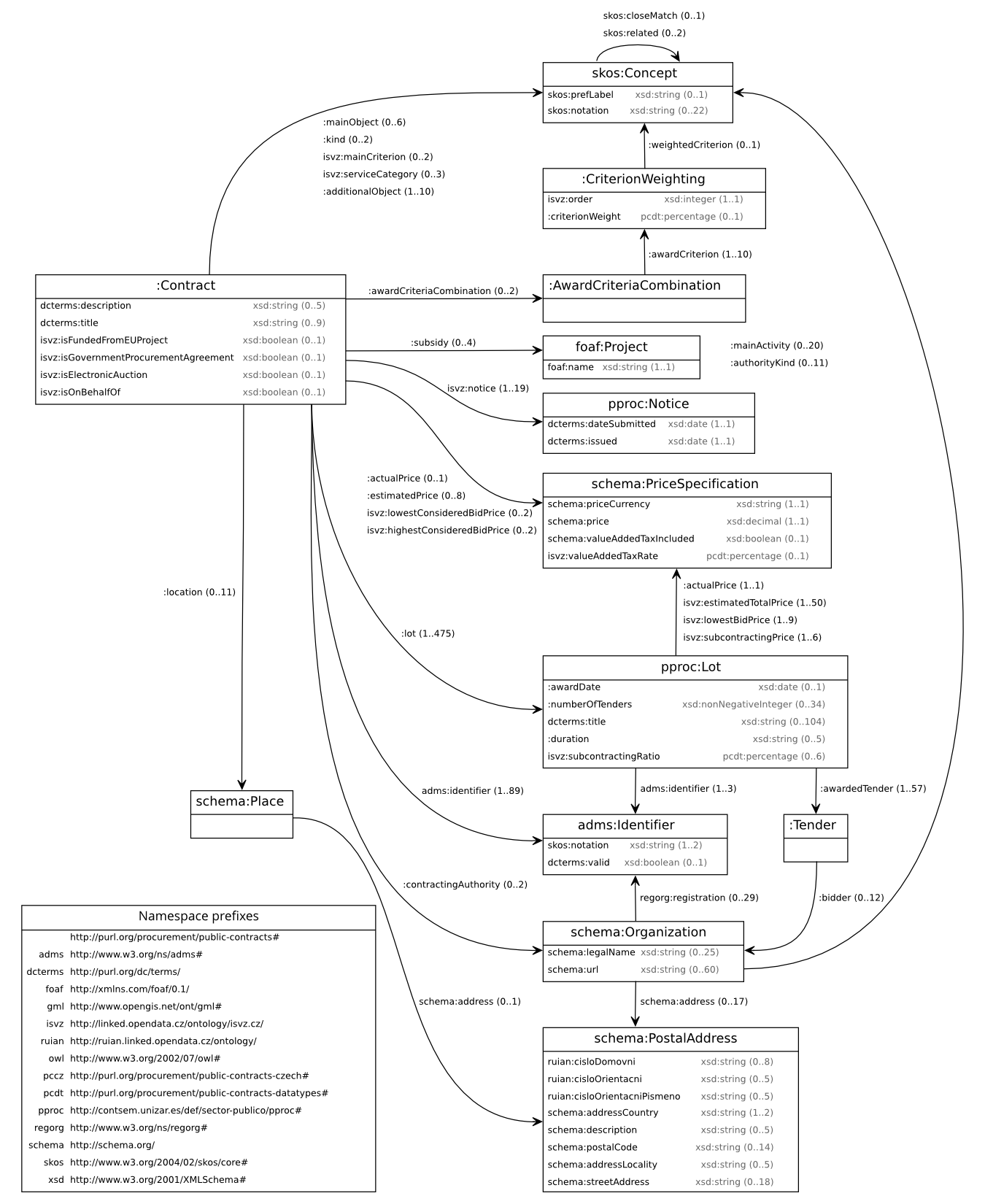

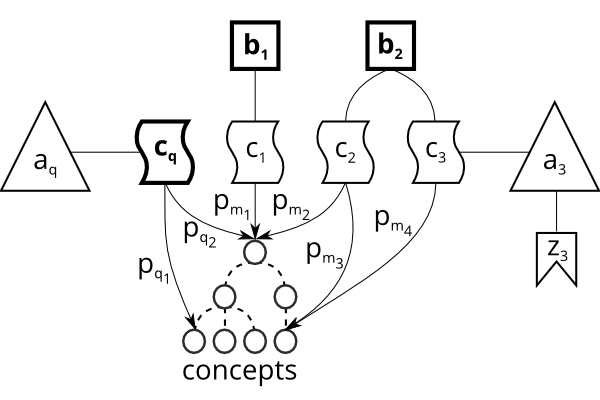

2.1.2 Concrete data model

The concrete data model of the Czech public procurement data uses PCO mixed with terms cherry-picked from other linked open vocabularies, such as the Public Procurement Ontology (PPROC) (Muñoz-Soro et al. 2016), which directly builds upon PCO. The data model’s class diagram is shown in Fig. 4.

The data model of the extracted data departs from PCO in several ways. There are ad hoc terms in the <http://linked.opendata.cz/ontology/isvz.cz/> namespace to represent dataset-specific features of the Czech public procurement register. Some of these terms are intermediate and are subsequently replaced during data transformation.

Contract objects expressed via pc:mainObject and pc:additionalObject are qualified instead of linking to CPV concepts directly. A proxy concept that links a CPV concept via skos:closeMatch is created for each contract object to allow qualification by concepts from the CPV’s supplementary vocabulary. The proxy concepts link their qualifiers via skos:related. For example, a contract may have Electrical machinery, apparatus, equipment and consumables; lighting (code 31600000) assigned as the main object, which can be qualified by the supplementary concept For the energy industry (code KA16). This custom modelling pattern was adopted, since SKOS does not recommend any way to represent pre-coordination of concepts.27
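The proxy pattern can be sketched as a small Python function that emits the triples for one qualified contract object. The property names follow the text above, whereas the function itself and all identifiers in the `ex:` namespace are hypothetical and do not reflect the IRIs used in the actual dataset.

```python
def qualified_object(contract, proxy, cpv_concept, qualifiers=(),
                     object_property="pc:mainObject"):
    """Build triples linking a contract to a CPV concept via a proxy concept.

    The proxy stands between the contract and the CPV concept, so that
    supplementary concepts can qualify this particular use of the concept.
    """
    triples = [
        (contract, object_property, proxy),
        (proxy, "skos:closeMatch", cpv_concept),
    ]
    # Each qualifier from the supplementary vocabulary is attached to the proxy.
    triples += [(proxy, "skos:related", qualifier) for qualifier in qualifiers]
    return triples


# Hypothetical identifiers for the example of code 31600000 qualified by KA16.
triples = qualified_object(
    contract="ex:contract/1",
    proxy="ex:contract/1/main-object",
    cpv_concept="ex:cpv/31600000",
    qualifiers=["ex:cpv-supplementary/KA16"],
)
```

Because the qualifier is attached to the proxy rather than to the CPV concept, it applies to this contract only and leaves the shared CPV concept untouched.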

Data in the Czech public procurement register is represented using notices, such as prior information notices or contract award notices. Notices are documents that announce changes in the life-cycle of public contracts. Using the terminology of Jacobs and Walsh (2004), notices can be considered information resources describing contracts as non-information resources. An information resource is “a resource which has the property that all of its essential characteristics can be conveyed in a message” (Jacobs and Walsh 2004), so that it can be transferred via HTTP. By contrast, non-information resources, such as physical objects or abstract notions, cannot be transferred via HTTP.

We represent contract notices as instances of the subclasses of pproc:Notice from PPROC, since PCO does not include the concept of a contract notice. PCO treats notices as mere artefacts of the document-based communication in public procurement. Each notice pertains to a single contract, while a contract may link several notices informing about its life-cycle. Notices thus provide a way to represent the temporal dimension of contracts. They serve as time-indexed snapshots tracking the evolution of contracts, based on the notice type and its timestamp. To a large extent, we treat notices as intermediate resources, the data of which are combined to form a unified view of contracts during data fusion. Nevertheless, in focusing on the central concept of the public contract, this modelling approach glosses over the temporal dimension of other data. For example, it does not accommodate expressing that a contracting authority was renamed. Neither is this supported by PCO, which was designed as atemporal, since modelling temporal data remains an open research topic.
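How time-indexed notices can be combined into a unified view of a contract may be sketched in Python as follows. The conflict resolution policy, in which values from later notices override values from earlier ones, is an assumption made for the illustration and not necessarily the policy applied in our data fusion. The notice structure, property names, and values are hypothetical as well.

```python
from datetime import date


def fuse_notices(notices):
    """Fold notices into one contract view, oldest first, so later values win."""
    contract = {}
    for notice in sorted(notices, key=lambda n: n["issued"]):
        contract.update(notice["data"])
    return contract


# Hypothetical notices about a single contract, given in arbitrary order.
notices = [
    {"type": "contract award notice", "issued": date(2013, 6, 1),
     "data": {"awardedBidder": "ex:bidder/1", "price": 950000}},
    {"type": "prior information notice", "issued": date(2012, 11, 15),
     "data": {"title": "Street lighting", "price": 1000000}},
]

contract = fuse_notices(notices)
```

In this sketch, the fused contract keeps the title from the prior information notice, while the price from the later contract award notice replaces the earlier one. A more careful policy could also take the notice type into account, as the text above suggests.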